The landscape for engineering and design consultancies is rapidly evolving. Traditional value propositions—rooted in precise drafting and detailed design—are being challenged by increasingly tech-savvy clients and commoditized markets. The arrival of predictive analytics consulting and digital twins is transforming how firms deliver value. Leaders at engineering firms must consider how to transition from transactional design to strategic, data-driven services. With the right engineering AI strategy, consultancies can unlock new growth and relevance by shaping insights that both protect assets and drive cost efficiencies for their clients.

Evolving from CAD Services to Data-Driven Insights

For decades, the core offering of most engineering and architectural consultancies has been to deliver accurate Computer-Aided Design (CAD) services. But as advanced drafting and modeling tools become more accessible, and offshoring pushes down costs, these services are increasingly viewed as commodities. The result: margins thin, and differentiation becomes ever more elusive.

At the same time, clients’ expectations are growing. Facility owners, operators, and investors now expect their engineering partners to deliver ROI projections, operational risk analyses, and actionable scenarios for future-proofing assets. Embedding predictive analytics into your services allows you to anticipate equipment failures, optimize asset lifecycles, and model future costs more precisely. Digital twins, dynamic models that mirror real-world structures and systems, are at the heart of this shift toward analytics. They enable consultancies to move from static design delivery to ongoing, outcome-focused problem solving.

With market forces accelerating the adoption of digital twin predictive maintenance and simulation, engineering firms that build robust analytics offerings are better positioned to secure long-term client relationships and new, recurring revenue streams.

Data Foundations: Aggregating Design, IoT, and Maintenance Data

Implementing predictive analytics consulting hinges on having clean, comprehensive data. Engineering data is scattered across BIM/CAD files, IoT sensor feeds, and maintenance logs—often siloed and inconsistent. To develop accurate predictive models, the first strategic move is to create a unified data foundation.

This typically begins with integrating historical CAD and BIM project files with streaming data from IoT devices, such as sensors tracking energy use, temperature, vibration, or occupancy. Maintenance records, warranty information, and operational logs provide the ongoing context required to relate design intent to real-world performance.

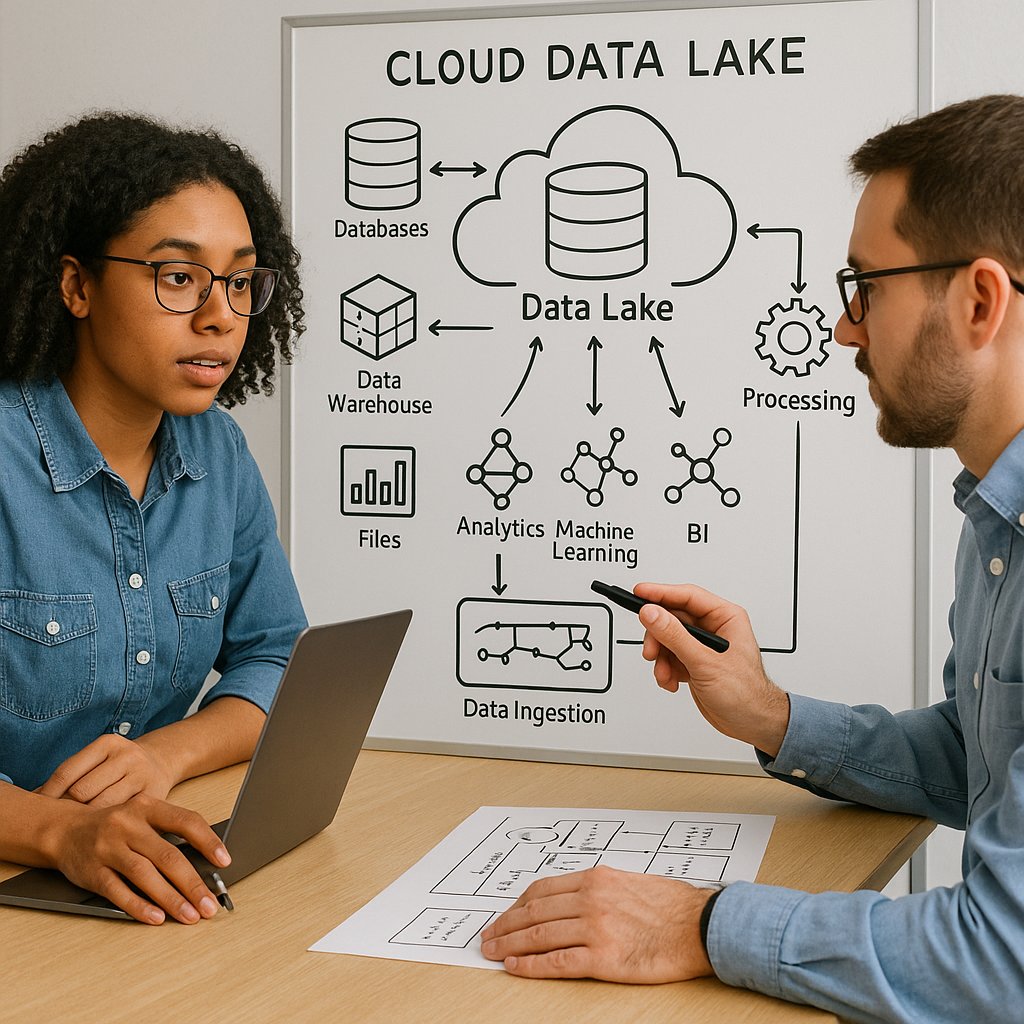

A key challenge is data quality. Files may be formatted differently, units may not align, and sensor data might contain gaps or noise. Normalizing this data—standardizing formats, correcting errors, and filling gaps—is essential for robust modeling. Advanced firms are turning to cloud-based data lake architectures, centralizing structured and unstructured data at scale, while allowing for flexible querying and analytics access. Establishing this foundation enables rapid prototyping of predictive models and shortens time to deployment in live client settings.
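As a rough illustration of the normalization step, the sketch below converts mixed temperature units to Celsius and fills short gaps by linear interpolation. The record layout (a value paired with a unit string) and the function names `to_celsius` and `fill_gaps` are assumptions made for this example, not a prescribed schema, and a production pipeline would likely also need timestamp alignment and noise filtering.

```python
from typing import Optional


def to_celsius(value: Optional[float], unit: str) -> Optional[float]:
    """Convert a temperature reading to Celsius; None marks a missing sample."""
    if value is None:
        return None
    if unit == "C":
        return value
    if unit == "F":
        return (value - 32.0) * 5.0 / 9.0
    raise ValueError(f"unsupported unit: {unit}")


def fill_gaps(series: list[Optional[float]]) -> list[Optional[float]]:
    """Linearly interpolate interior gaps; leading/trailing gaps stay None."""
    filled = list(series)
    known = [i for i, v in enumerate(filled) if v is not None]
    for left, right in zip(known, known[1:]):
        span = right - left
        for i in range(left + 1, right):
            weight = (i - left) / span
            filled[i] = filled[left] + weight * (filled[right] - filled[left])
    return filled


# Hypothetical raw feed: mixed units and one dropped sample.
raw = [(20.0, "C"), (71.6, "F"), (None, "C"), (24.0, "C")]
normalized = fill_gaps([to_celsius(v, u) for v, u in raw])
```

Linear interpolation is only reasonable for short gaps in slowly changing signals; longer outages are usually better flagged than filled.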

Developing and Operationalizing Predictive Models

Once the data layer is established, the next challenge is to develop, validate, and operationalize predictive models tailored to your clients’ business outcomes. The process begins with careful feature engineering—identifying which design parameters, IoT signals, and historical maintenance records best predict the outcomes your clients care about. For example, features might include valve size from design data, vibration readings from sensors, and repair event frequencies from maintenance logs.
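One way to picture this is as a single feature row per asset that draws on all three sources. The sketch below is illustrative only: the field names (`valve_size_mm`, `vibration_mm_s`, `repair_dates`) and the 365-day look-back window are assumptions, not a standard schema.

```python
from datetime import date
from statistics import mean


def build_features(design: dict, vibration_mm_s: list[float],
                   repair_dates: list[date], as_of: date,
                   window_days: int = 365) -> dict:
    """Combine design, sensor, and maintenance data into one feature row."""
    # Count only repair events that fall inside the look-back window.
    recent = [d for d in repair_dates if 0 <= (as_of - d).days <= window_days]
    return {
        "valve_size_mm": design["valve_size_mm"],   # from design data
        "vibration_mean": mean(vibration_mm_s),     # from sensor readings
        "vibration_peak": max(vibration_mm_s),
        "recent_repairs": len(recent),              # from maintenance logs
    }


# Hypothetical asset record.
features = build_features(
    design={"valve_size_mm": 150},
    vibration_mm_s=[2.0, 2.5, 3.0, 4.5],
    repair_dates=[date(2022, 3, 1), date(2023, 9, 15), date(2024, 1, 10)],
    as_of=date(2024, 6, 1),
)
```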

Models range from time-series forecasts (predicting when equipment may need service) to anomaly detection (identifying unusual operating patterns that signal risk). As models mature, firms must decide on the architecture for deploying insights: performing inference directly on edge devices (for real-time alerts), or in the cloud (enabling higher-complexity models and cross-asset benchmarking).
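To make the anomaly detection idea concrete, here is a minimal sketch using a trailing-window z-score, a simple statistical baseline rather than any specific model a firm would necessarily deploy. The window size, threshold, and sample readings are assumptions chosen for illustration.

```python
from statistics import mean, stdev


def rolling_zscore_anomalies(readings: list[float], window: int = 5,
                             threshold: float = 3.0) -> list[int]:
    """Return indices whose value deviates from the mean of the preceding
    `window` readings by more than `threshold` standard deviations."""
    anomalies = []
    for i in range(window, len(readings)):
        history = readings[i - window:i]
        mu, sigma = mean(history), stdev(history)
        # Skip flat windows, where the z-score is undefined.
        if sigma > 0 and abs(readings[i] - mu) > threshold * sigma:
            anomalies.append(i)
    return anomalies


# Hypothetical vibration trace (mm/s) with one spike at index 7.
trace = [2.0, 2.1, 1.9, 2.0, 2.2, 2.1, 2.0, 6.5, 2.1, 2.0]
flagged = rolling_zscore_anomalies(trace)
```

A check this light is a plausible fit for edge devices, while heavier models and cross-asset comparisons would sit more naturally in the cloud.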

Model explainability is essential when introducing predictive analytics into engineering workflows. Clients, many of whom are non-technical stakeholders, need a clear, transparent rationale for every insight. Explainable AI methods such as Shapley values or decision-tree visualizations help build trust and ensure buy-in from clients’ operations, finance, and executive teams.
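For readers unfamiliar with Shapley values, the sketch below computes them exactly for a toy model by averaging each feature's marginal contribution over all feature subsets. The `risk_score` model, feature names, and baseline values are hypothetical, and exact enumeration grows exponentially with the number of features, so real deployments typically rely on approximation methods instead.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, instance: dict, baseline: dict) -> dict:
    """Exact Shapley values: each feature's weighted average marginal
    contribution when switched from its baseline to its instance value."""
    names = list(instance)
    n = len(names)

    def value(coalition):
        # Features in the coalition take instance values, the rest baseline.
        x = {k: (instance[k] if k in coalition else baseline[k]) for k in names}
        return predict(x)

    result = {}
    for name in names:
        others = [k for k in names if k != name]
        total = 0.0
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                members = set(subset)
                total += weight * (value(members | {name}) - value(members))
        result[name] = total
    return result


# Hypothetical linear risk score, chosen so the result is easy to verify.
def risk_score(x):
    return 0.5 * x["vibration"] + 2.0 * x["repairs"]


contrib = shapley_values(
    risk_score,
    instance={"vibration": 6.0, "repairs": 3.0},
    baseline={"vibration": 2.0, "repairs": 1.0},
)
```

The contributions sum to the difference between the score for this asset and the score for the baseline, which is the property that makes a statement like "most of this risk comes from repair history" defensible to a non-technical audience.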

Packaging Insights as New Revenue Streams

Transforming predictive models into monetizable offerings requires new business models and go-to-market strategies. Instead of one-time project fees, leading firms are launching value-added subscription dashboards that continuously update clients with health scores, risk flags, and optimized maintenance schedules across assets. These platforms can be tailored with role-based access and customized reporting to deepen client engagement.

Another powerful approach is outcome-based pricing, where consulting fees scale with proven improvements—such as a reduction in downtime or maintenance costs. By aligning incentives with client outcomes, consultancies become strategic partners instead of mere service providers. Engineering AI strategy coupled with digital twin predictive maintenance can be articulated directly in these pricing models, placing client goals at the center of the relationship.
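The arithmetic behind such a fee can be sketched as a fixed base plus a share of verified savings. The structure, the function name `outcome_based_fee`, and every figure below are assumptions for illustration; actual contracts vary widely.

```python
def outcome_based_fee(baseline_cost: float, actual_cost: float,
                      base_fee: float, share_of_savings: float) -> float:
    """Fixed base fee plus a share of verified savings.

    If costs rise instead of falling, savings are treated as zero,
    so the fee never drops below the base fee.
    """
    savings = max(0.0, baseline_cost - actual_cost)
    return base_fee + share_of_savings * savings


# Hypothetical figures: a 15% cut in a 1,000,000 annual maintenance budget.
fee = outcome_based_fee(baseline_cost=1_000_000, actual_cost=850_000,
                        base_fee=50_000, share_of_savings=0.20)
```

With these illustrative numbers, savings of 150,000 and a 20% share put the total fee at roughly 80,000, leaving the client with most of the benefit.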

The payoff is significant. Firms that can reduce clients’ annual maintenance costs by 10-20%, extend equipment life, or minimize downtime can command premium positioning. As engineering decision-makers seek partners who proactively manage risk and deliver operational savings, not just drawings, consultancies that embrace predictive analytics will stand out for years to come.
