As CIOs and Heads of Risk in banking weigh the promise of large language models against strict regulatory expectations, prompt engineering emerges as the single fastest lever for delivering safe, measurable GenAI impact. Prompt engineering banking initiatives translate high-level model risk controls into operational rules that developers, compliance teams, and line managers can use immediately. This guide gives a pragmatic, risk-aware framework for launching a first wave of GenAI applications that move the needle on efficiency without increasing exposure to model incidents.

Executive brief: Why prompt engineering matters in regulated finance

Regulators are increasingly focused on model risk, explainability, and governance. At the same time, banks are under pressure to reduce cost-to-serve and speed up critical decision cycles. That intersection creates a clear mandate for a measured GenAI approach: capture fast wins by optimizing prompts rather than chasing immediate model retraining or multiple vendor swaps. Prompt patterns are the lowest-friction way to improve output quality, constrain hallucination, and standardize behavior across copilots and internal agents.

Early, high-impact use cases are practical: an employee copilot that answers policy Q&A with citations, KYC/AML summarization that preserves audit trails, or compliance drafting assistants that surface relevant policy sections. These deliverables reduce cycle times and improve first-pass quality while keeping model risk visible and controllable.

Risk-aware prompt design principles for banks

Translating model risk policy into prompt rules starts with specificity. In regulated environments, ambiguity is the enemy. Prompts must contain explicit instructions for tone, task scope, and refusal criteria so the model understands when to decline a risky request. Require source attribution by default and penalize fabrication in your evaluation criteria. That means building prompts that force the model to return citations or an empty answer rather than inventing content.

Another effective technique is schema enforcement. Constrain outputs to machine-parseable formats such as strict JSON schemas or domain glossaries so downstream systems can validate content automatically. Schemas reduce ambiguity, make auditing easier, and let you detect deviations programmatically. Finally, incorporate domain-specific checks—glossary-enforced terminology, numeric tolerances for financial figures, and mandatory signature lines for compliance memos—to align prompts with operational controls.

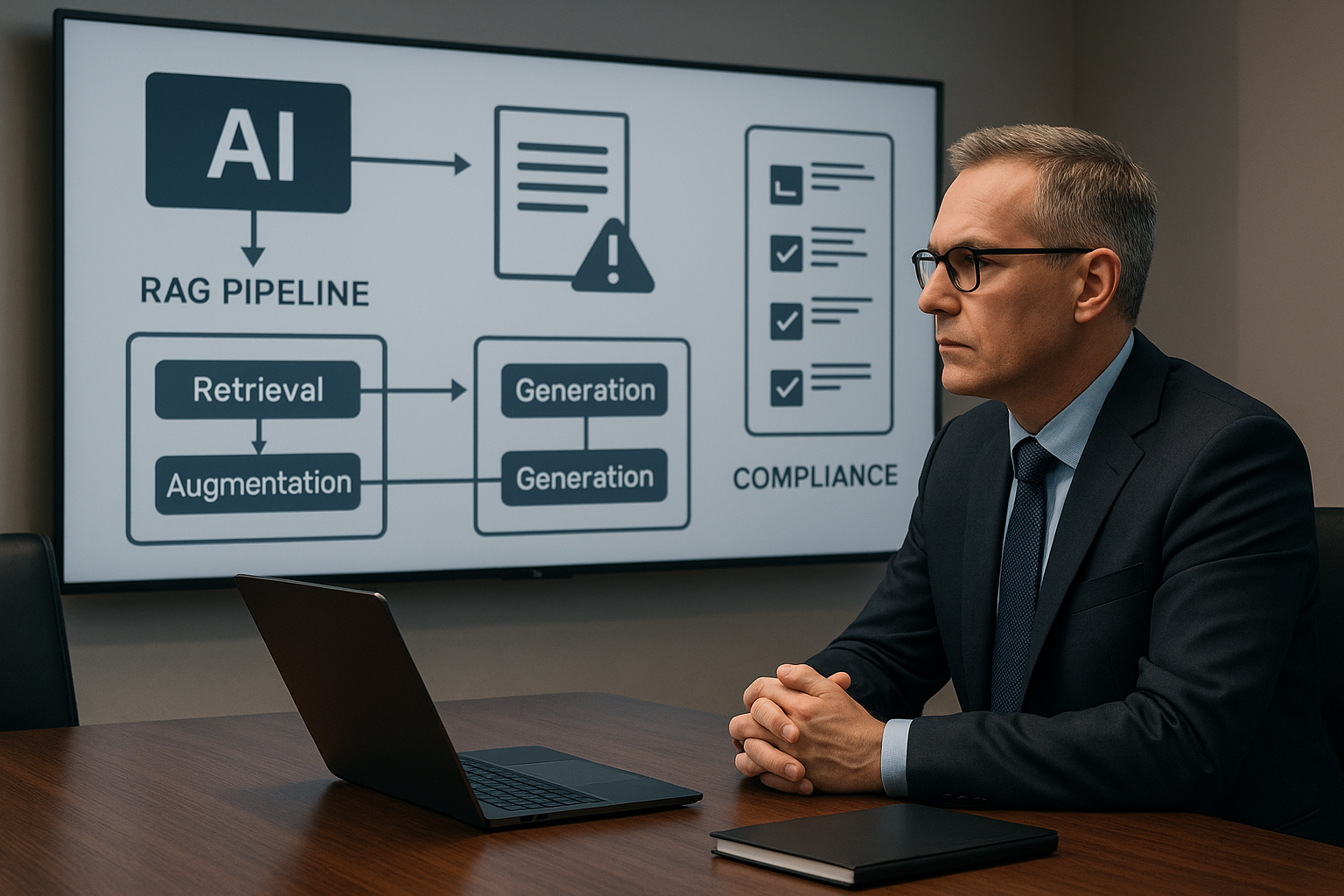

RAG with redaction: Pulling from the right data, safely

Retrieval-augmented generation becomes safe and compliant only when the retrieval layer is engineered to respect privacy, sensitivity, and provenance. For RAG redaction financial services deployments, begin with data minimization: redact PII/PCI before indexing and store only the contextual passages needed for response generation. Redaction should be deterministic and logged so you can show what was removed and why.

Vector stores must be segmented by sensitivity and wired to role-based access controls. Treat customer-identifiable records and transaction history as high-sensitivity shards that require elevated approvals and additional audit trails. Prompt templates should explicitly instruct the model to answer only from retrieved passages and to include source anchors with every factual claim. This approach minimizes hallucination and ensures any model assertion can be traced to a known document or policy section.

Evaluation and QA: From ‘seems right’ to measurable quality

Operationalizing quality means institutionalizing rigorous testing. Build golden datasets with clear acceptance criteria—accuracy thresholds, coverage measures, and citation fidelity expectations. Define what “good enough” looks like for each use case and codify it so that developers and risk officers evaluate against the same yardstick.

Adversarial testing is equally important. Run jailbreak attempts and policy-violating prompts to surface vulnerabilities and harden refusal behaviors. Integrate these tests into a CI/CD pipeline so every prompt change triggers automated checks. That’s the essence of LLMOps in finance: continuous evaluation, telemetry capture, and human review gates for high-risk outputs. Keep a human-in-the-loop for any decision that materially affects customer funds, creditworthiness, or regulatory reporting.

High-ROI, low-risk use cases to start

Choose initial deployments that reduce cycle time and touch well-understood data sets. KYC/AML file summarization is a predictable first wave: models can extract and condense client onboarding documents, flag missing evidence, and provide source-cited summaries that speed analyst review. A compliance copilot that answers employee questions about policy and returns links to the exact policy sections lowers reliance on scarce compliance experts while maintaining an audit trail. Loan operations assistants that generate checklists, prioritize exceptions, and suggest routing reduce backlogs and accelerate decisioning without changing credit policy.

These applications are attractive because they provide measurable operational gains—handle-time reduction and backlog burn-down—while maintaining narrow scopes that are easier to validate and control.

Metrics that matter to CIOs and CROs

To secure continued investment, map prompt engineering outcomes to operational and risk KPIs. Track handle-time reduction and first-pass yield in the workflows you optimize; these are direct indicators of cost savings. Monitor error and escalation rates versus baseline to ensure model-assisted tasks do not increase downstream risk. For compliance and credit functions, time-to-approve for documents (loans, memos, remediation actions) is a powerful metric that executives understand.

Model incident avoidance should also be reported: near-miss events from adversarial tests, false-positive and false-negative rates for KYC alerts, and citation fidelity rates. These metrics feed into governance reviews and help you demonstrate that prompt engineering banking initiatives are improving outcomes while controlling exposure.

Implementation roadmap (60–90 days)

A pragmatic timeline lets you show value quickly and harden controls iteratively. Weeks 1–2 focus on use-case triage and policy-to-prompt translation. Assemble a cross-functional team—compliance, legal, ops, and engineering—to create golden sets and map acceptance criteria. Weeks 3–6 are about building: implement RAG with redaction, segment vector stores, and enforce role-based access. Simultaneously, stand up your evaluation harness and automate adversarial tests.

Between Weeks 7–12, pilot with risk sign-off, expand your prompt library based on feedback, and train super-users who become the organizational champions for consistent prompt usage. Throughout, keep stakeholders informed with metric-driven reports and an auditable log of prompt changes and evaluation results.

How we help: Strategy, automation, and development

Bringing this to production requires three capabilities: strategy that aligns use cases to model risk, automation to embed prompt rules into workflows, and development to build secure RAG pipelines and evaluation tooling. We help design a prioritized use-case portfolio and translate policy into reusable prompt templates, build redaction and segmented indexing pipelines, and implement LLMOps practices that include CI/CD, golden datasets, and continuous monitoring.

For banking CIOs planning their next moves, a focused prompt engineering banking program—anchored in genAI compliance principles, RAG redaction financial services techniques, and LLMOps in finance practices—delivers measurable efficiency gains with controlled risk. Start narrow, measure rigorously, and scale the behaviors that pass both operational tests and regulatory scrutiny.

Sign Up For Updates.