Across the healthcare industry, artificial intelligence is poised to transform clinical workflows, unlock new diagnostic capabilities, and personalize patient care. For healthcare CIOs, every step toward AI adoption must be rooted in a foundation that balances cutting-edge innovation with the responsibility to protect sensitive patient data. Before the first machine learning model is trained, there are critical decisions about infrastructure, governance, and organizational structure that can make or break both compliance and clinical success. If you’re at the starting line of your healthcare AI journey, understanding these foundations is the key to building trustworthy, HIPAA-compliant AI platforms that live up to the sector’s mission to “do no harm.”

Why ‘Do No Harm’ Applies to Data Too

Patient safety doesn’t end at bedside care; it extends to every byte of patient information. The HIPAA Privacy and Security Rules set the requirements for safeguarding Protected Health Information (PHI), including controls on who can access this data, how it is transmitted, and how its use is audited. In the context of AI, the stakes of compliance are higher still: models trained on non-compliant, improperly governed, or low-quality data can indirectly harm patients through misdiagnosis, bias, or unauthorized exposure of sensitive details.

Today’s healthcare CIOs operate in an unforgiving landscape: breaches of PHI can result in hefty fines, brand damage, and, more crucially, a loss of trust from your patient community. Trust can itself be a competitive advantage, since patients are more likely to engage with and remain loyal to systems that transparently and effectively safeguard their information. Building HIPAA-compliant AI, then, is not only about following regulations but also about strengthening your organization’s long-term reputation and care outcomes.

Building the Clinical Data Lake

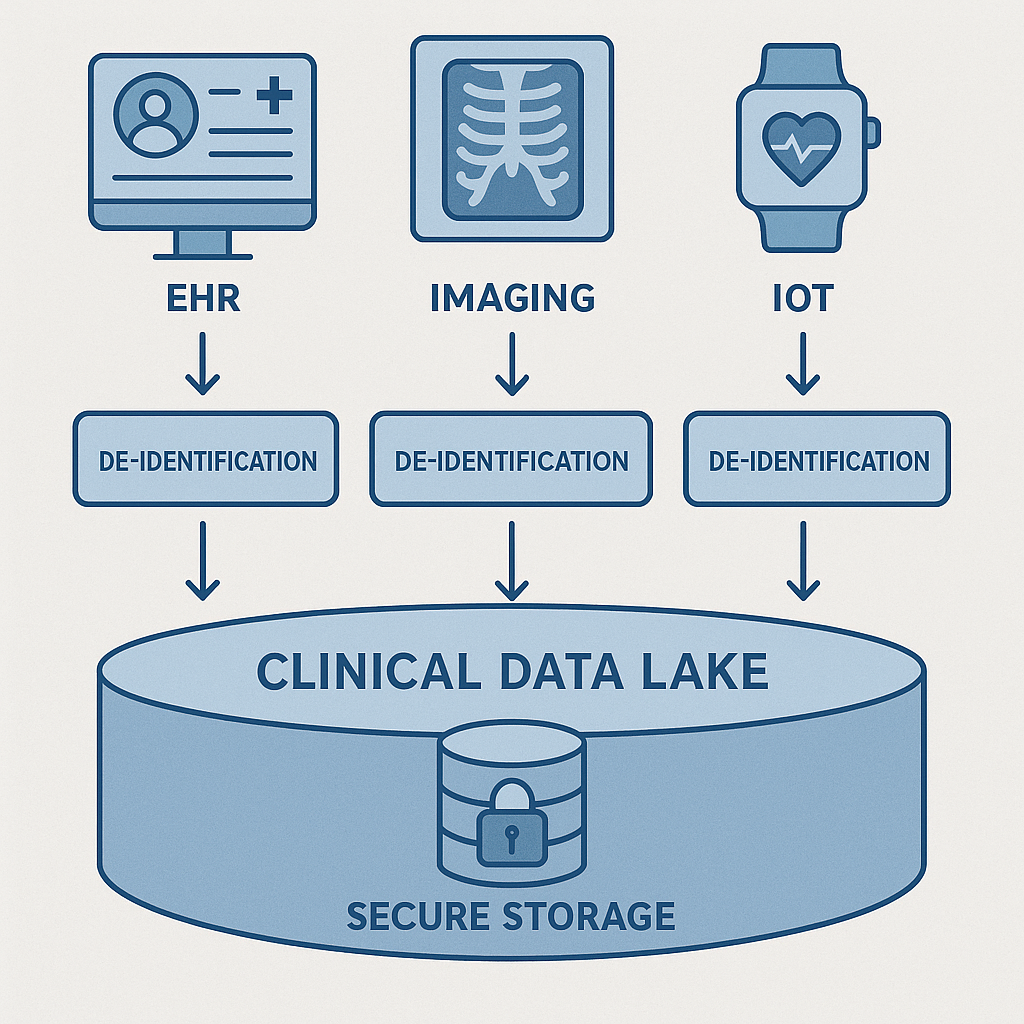

Effective healthcare AI begins with a robust foundation for storing and preparing data. Today’s hospital environments are awash in EHR entries, diagnostic imaging, real-time IoT device streams, and more. Unifying this information into a ‘clinical data lake’—a centralized repository for all raw and processed health data—is the essential first step for modern AI initiatives.

Interoperability standards such as FHIR (Fast Healthcare Interoperability Resources) provide the common language needed to structure and exchange clinical data across systems. However, before any data makes its way toward model training, rigorous de-identification pipelines are crucial. These pipelines must automatically remove or obfuscate patient identifiers, following one of the two de-identification methods HIPAA recognizes (Safe Harbor or Expert Determination), so that AI teams can access meaningful cohorts without jeopardizing privacy.
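As a rough illustration of what one de-identification step might look like, here is a minimal Python sketch that operates on a FHIR-style Patient resource represented as a plain dictionary. The field list, the `deidentify_patient` and `pseudonymize_id` helpers, and the keyed-hash approach are assumptions made for illustration. The sketch covers only a handful of direct identifiers and is not, on its own, sufficient for either HIPAA de-identification method (for example, it does not handle free-text notes, dates other than birth date, or ages over 89).

```python
import copy
import hashlib
import hmac

# Hypothetical subset of FHIR Patient fields treated as direct identifiers.
# A production pipeline would need a much broader, reviewed list.
DIRECT_IDENTIFIER_FIELDS = ("name", "telecom", "address", "identifier", "photo", "contact")


def pseudonymize_id(patient_id: str, secret_key: bytes) -> str:
    """Replace an ID with a keyed hash so records stay linkable within a cohort."""
    return hmac.new(secret_key, patient_id.encode("utf-8"), hashlib.sha256).hexdigest()


def deidentify_patient(resource: dict, secret_key: bytes) -> dict:
    """Return a copy of a FHIR-style Patient dict with direct identifiers removed."""
    cleaned = copy.deepcopy(resource)  # never mutate the source record
    for field_name in DIRECT_IDENTIFIER_FIELDS:
        cleaned.pop(field_name, None)
    if "id" in cleaned:
        cleaned["id"] = pseudonymize_id(cleaned["id"], secret_key)
    if "birthDate" in cleaned:
        # FHIR dates start with a four-digit year; keep only that.
        cleaned["birthDate"] = cleaned["birthDate"][:4]
    return cleaned


if __name__ == "__main__":
    patient = {
        "resourceType": "Patient",
        "id": "example-123",
        "name": [{"family": "Doe", "given": ["Jane"]}],
        "birthDate": "1980-05-17",
        "gender": "female",
    }
    print(deidentify_patient(patient, secret_key=b"replace-with-managed-secret"))
```

Using a keyed hash rather than a plain hash means the pseudonym cannot be recomputed by someone who knows the original ID but not the key. In practice that key would live in a managed secret store, outside the reach of the AI team.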

Maintaining compliance also means creating immutable audit logs at every touchpoint, tracing exactly who accessed what data, when, and for what purpose. A detailed, tamper-evident audit trail not only deters misuse but also allows for swift, precise action if access policies are ever questioned. This layer of traceability is what helps bridge the gap between regulatory assurance and operational practicality in the clinical data lake.
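One common way to make an audit trail tamper-evident is to chain entries together with hashes, so that altering any earlier record invalidates everything after it. The sketch below illustrates the idea in Python. The entry fields and the `append_entry` and `verify_chain` helpers are hypothetical, and a real deployment would typically pair this with write-once storage rather than an in-memory list.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _entry_hash(body: dict) -> str:
    """Hash a canonical JSON encoding of the entry body."""
    canonical = json.dumps(body, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def append_entry(log: list, user: str, resource: str, purpose: str, timestamp: str = None) -> dict:
    """Append a who/what/when/why record linked to the previous entry's hash."""
    body = {
        "user": user,
        "resource": resource,
        "purpose": purpose,
        "timestamp": timestamp or datetime.now(timezone.utc).isoformat(),
        "prev_hash": log[-1]["hash"] if log else GENESIS_HASH,
    }
    entry = dict(body, hash=_entry_hash(body))
    log.append(entry)
    return entry


def verify_chain(log: list) -> bool:
    """Return True only if every entry is unmodified and correctly linked."""
    prev = GENESIS_HASH
    for entry in log:
        body = {k: v for k, v in entry.items() if k != "hash"}
        if body.get("prev_hash") != prev or _entry_hash(body) != entry["hash"]:
            return False
        prev = entry["hash"]
    return True


if __name__ == "__main__":
    audit_log = []
    append_entry(audit_log, "dr_lee", "Patient/abc", "treatment")
    append_entry(audit_log, "analyst_1", "Cohort/42", "model-training")
    print(verify_chain(audit_log))      # chain is intact
    audit_log[0]["purpose"] = "research"
    print(verify_chain(audit_log))      # edit to an earlier entry is detected
```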

Selecting Cloud Services and On-Prem Components

Few healthcare organizations are completely cloud-native or fully on-premises today; most find their best path forward in hybrid architectures. Public cloud offerings can drive rapid innovation, but handling PHI in these environments requires a signed Business Associate Agreement (BAA) with the cloud provider. Many organizations also look for HITRUST-certified services: HIPAA itself does not mandate the certification, but it is a widely used indicator that a vendor’s controls have been independently assessed against healthcare data protection requirements.

Yet, not all workflows can leave the hospital premises, especially in mission-critical environments like operating rooms, intensive care units, or remote imaging facilities. Here, edge inference—where AI models run on secure, onsite hardware—ensures that real-time data analysis isn’t disrupted by cloud connectivity or latency challenges. Planning for such latency and resiliency is as important as choosing storage: the chain of care should never be interrupted by a network outage or cloud region downtime.
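To make the resiliency point concrete, here is a minimal Python sketch of one possible pattern: attempt a cloud-hosted prediction and fall back to an on-site model if the network call fails. The `predict_with_fallback` function and both predictor callables are hypothetical stand-ins, not a specific vendor API. Many sites will instead run edge-first and treat the cloud as optional.

```python
from typing import Callable, Sequence, Tuple

# A predictor takes a feature vector and returns a risk score.
Predictor = Callable[[Sequence[float]], float]


def predict_with_fallback(
    features: Sequence[float],
    cloud_predict: Predictor,
    edge_predict: Predictor,
) -> Tuple[float, str]:
    """Return (score, source), using the on-site model if the cloud is unreachable."""
    try:
        return cloud_predict(features), "cloud"
    except (TimeoutError, ConnectionError):
        # Network outage or slow region: keep care delivery going locally.
        return edge_predict(features), "edge"


if __name__ == "__main__":
    def unreachable_cloud(features):
        raise ConnectionError("simulated cloud region outage")

    def local_model(features):
        # Stand-in for a model running on secure on-site hardware.
        return sum(features) / len(features)

    print(predict_with_fallback([0.2, 0.4, 0.6], unreachable_cloud, local_model))
```

Returning the source alongside the score lets downstream systems record which model produced a given recommendation, which matters when the two models are on different versions.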

A successful HIPAA-compliant AI platform is often a careful orchestration of both cloud scalability and local control. For CIOs, this means rigorous vetting of cloud contracts, clear delineation of on-prem versus cloud workloads, and robust failover planning that keeps care delivery safe regardless of technical hiccups.

Foundational MLOps for Regulated Data

Even the best data lake and infrastructure are incomplete without sound MLOps (Machine Learning Operations) practices, especially in regulated healthcare environments. Monitoring, versioning, and documenting every model are not luxuries—they are core requirements. Each AI model should be accompanied by a model card: a comprehensive, living document that details the training data, intended use, known risks, and a specific PHI risk assessment. This transparency makes it easier to spot potential bias or misuse and provides traceability for regulators and internal stakeholders alike.
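A model card can be kept as structured data so that tooling can check it for completeness before a model is promoted. The following Python sketch shows one possible shape. The `ModelCard` class and its field names simply mirror the items listed above and are an assumption, not a standard schema.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class ModelCard:
    """Hypothetical minimal model card for a clinical AI model."""
    model_name: str
    version: str
    training_data: str          # description of (de-identified) training cohorts
    intended_use: str
    known_risks: list = field(default_factory=list)
    phi_risk_assessment: str = ""

    def missing_sections(self) -> list:
        """List the sections that are still empty, in declaration order."""
        return [name for name, value in asdict(self).items() if not value]

    def to_json(self) -> str:
        """Serialize for storage alongside the versioned model artifact."""
        return json.dumps(asdict(self), indent=2, sort_keys=True)


if __name__ == "__main__":
    card = ModelCard(
        model_name="sepsis-risk",
        version="1.2.0",
        training_data="De-identified ICU encounters",
        intended_use="Clinical decision support only; not for autonomous diagnosis",
    )
    print(card.missing_sections())  # sections a reviewer still needs to fill in
    print(card.to_json())
```

A release pipeline could refuse to deploy any model whose card still reports missing sections, which turns documentation from a convention into an enforced gate.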

Continuous compliance scans must be built into the data and model workflow, automatically flagging any drift in access patterns, changes in data composition, or lapses in encryption or retention policies. It’s equally important to formalize an incident response workflow for AI. If a potential PHI breach occurs via a model or supporting data pipeline, your teams need a well-rehearsed playbook for containment, notification, remediation, and postmortem analysis—mirroring the rigor seen in clinical quality assurance programs.
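A continuous compliance scan can start as a scheduled job that compares each dataset's current state against policy and emits findings. The Python sketch below illustrates the idea. The `DatasetPolicyState` fields, the thresholds, and the `scan` function are all hypothetical, and real values would come from your own retention schedule and access baselines.

```python
from dataclasses import dataclass
from typing import Iterable, List, Tuple

# Hypothetical policy thresholds; set these from your own policies.
MAX_RETENTION_DAYS = 2190
ACCESS_DRIFT_RATIO = 3.0  # flag if today's access is more than 3x the baseline


@dataclass
class DatasetPolicyState:
    name: str
    encrypted_at_rest: bool
    retention_days: int
    daily_access_count: int
    baseline_daily_access: float


def scan(states: Iterable[DatasetPolicyState]) -> List[Tuple[str, str]]:
    """Return (dataset, finding) pairs for every policy check that fails."""
    findings = []
    for s in states:
        if not s.encrypted_at_rest:
            findings.append((s.name, "encryption at rest is disabled"))
        if s.retention_days > MAX_RETENTION_DAYS:
            findings.append((s.name, "retention exceeds policy"))
        if (
            s.baseline_daily_access > 0
            and s.daily_access_count / s.baseline_daily_access > ACCESS_DRIFT_RATIO
        ):
            findings.append((s.name, "access volume drifted from baseline"))
    return findings


if __name__ == "__main__":
    datasets = [
        DatasetPolicyState("imaging-raw", True, 365, 40, 35.0),
        DatasetPolicyState("ehr-extract", False, 4000, 500, 50.0),
    ]
    for dataset, finding in scan(datasets):
        print(f"{dataset}: {finding}")
```

Findings from a job like this can feed the same incident response workflow described above, so that a lapse in encryption is triaged with the same rigor as a suspected breach.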

Talent & Governance

No AI transformation succeeds on technology alone. Healthcare CIOs face a distinct challenge in assembling the right mix of talent and governance to ensure successful, responsible implementation. Data stewards and clinical informaticists play a foundational role in curating and reviewing datasets—not just for technical quality, but for relevance and safety in care delivery. Their partnership bridges the gap between raw data and real-world clinical nuance.

But responsibility does not stop at data management. Establishing an AI ethics board brings multidisciplinary oversight to the table, incorporating perspectives from clinicians, legal counsel, and patient advocates to regularly scrutinize how models are developed and deployed. This board ensures that AI is not just accurate, but also fair, transparent, and consistent with your organization’s mission and compliance responsibilities.

Lastly, the best technical safeguards are only as effective as the clinicians who use them. Training physicians, nurses, and technicians on AI literacy—covering not just the capabilities but also the limitations and risks—empowers them to safely interpret AI-driven recommendations and spot issues before they reach the patient.

As a healthcare CIO beginning the journey to a HIPAA-compliant AI foundation, the right groundwork pays dividends in both operational excellence and patient trust. By taking a rigorous, multidisciplinary approach to data, infrastructure, and governance, your organization will be prepared to innovate with confidence, align with regulatory mandates, and deliver on the promise of safer, more intelligent care.
