What’s the deal with AR, anyway?

XR technology is widely touted as having infinite potential to create new worlds. You can design scenes with towering skyscrapers, alien spacecraft, magical effects, undersea expanses, futuristic machinery, really anything your heart desires. Within those spaces, you can fly, throw, slash, burn, freeze, enchant, record, create, draw and paint — any verb you can come up with. The only limit is your imagination!

Painting in VR with Mozilla’s A-Painter XR project. Credit: Mozilla 2018.

Sounds cool. What’s the problem?

Well, all of that is true, to a point. For all our optimism about the potential of AR and VR, we are still bound by the practical limitations of the hardware. One of the biggest obstacles to creating immersive, interactive, action-packed, high-fidelity XR experiences is that the machines used to run them just don’t have the juice to render them well. The ones that do are either high-end devices with a steep price tag, which makes them inaccessible, or too large to be portable, which rules out the free movement you would expect from an immersive experience.

That’s not to say that we can’t do cool things with our modern XR technology. We’re able to summon fashion shows in our living rooms, share cooperative creature-catching gaming experiences, alter our faces, clothing, and other aspects of our appearance, and much, much more. But it’s easy to imagine what we could do without our hardware limitations. Think of the depth, detail, and artistry boasted by popular open-world games on the market: The Elder Scrolls V: Skyrim, The Legend of Zelda: Breath of the Wild, No Man’s Sky, and Red Dead Redemption 2, just to name a few. Now imagine superimposing those kinds of experiences onto the real world, augmenting our reality with endless new content: fantastic flora and fauna wandering our streets, digital store facades that overlay real ones, and information and quests waiting to be discovered at landmarks and local institutions.

Promotional screenshot from The Legend of Zelda: Breath of the Wild. Credit: Nintendo 2020.

There are many possibilities outside of the gaming and entertainment sphere, too. Imagine taking a walking tour through the Roman Colosseum or Machu Picchu or the Great Wall of China from your own home, with every stone in as fine detail as you might see if you were really there. Or imagine browsing through a car dealership or furniture retailer’s inventory with the option of seeing each item in precise, true-to-life proportion and detail in whatever space you choose.

We want to get to that level, obviously, but commercially available AR devices (i.e. typical smartphones) simply cannot support it. High-fidelity 3D models can be huge files with millions of faces and vertices. Large open worlds may have thousands of objects that require individual shadows, lighting, pathing, behavior, and other rendering considerations. User actions and interactions within a scene may require serious computational power. Without addressing these challenges and more, AR cannot live up to the wild potential of our imaginations.

So what can we do about it?

Enter render streaming. Realistically, modern AR devices can’t take care of all these issues…but desktop machines have more than enough horsepower. The proof is in the pudding: the open-world video games mentioned above show that we can very much create whole worlds from scratch and render them fluidly at high frame rates. So let’s outsource the work!

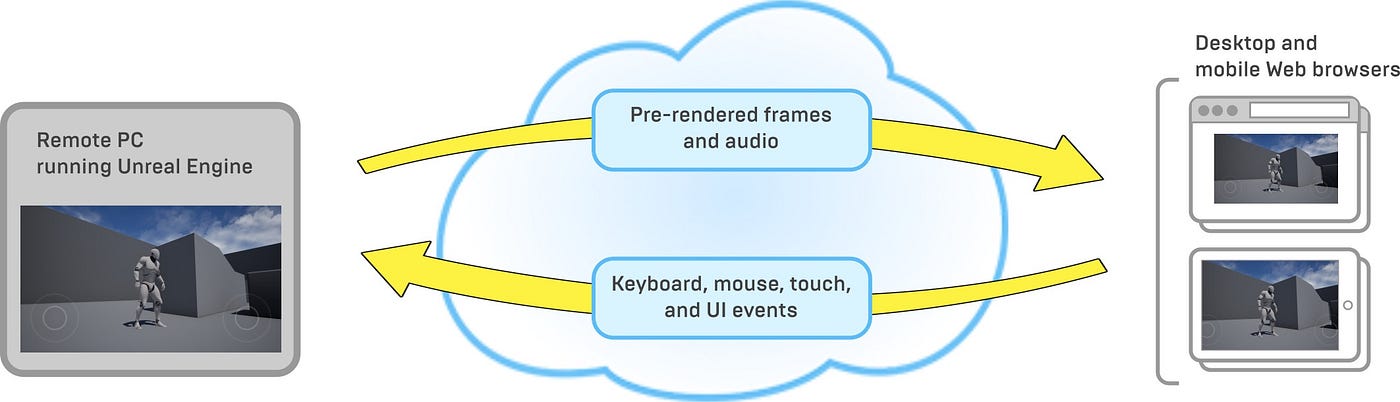

The process of render streaming starts with an XR application running on a machine with a much stronger GPU than a smartphone (at scale, a server, physical or cloud-based). Each frame of the experience is rendered there in real time and sent to the display device (your smartphone). Any inputs from the display device, such as the camera feed and touch, gyroscope, and motion sensors, are transmitted back to the server and processed in the XR application, which then sends the next updated frame to the display device. It’s like on-demand video streaming, with an extra layer of input from the viewing device. This frees the viewing device from actually having to handle the computational load. Its only responsibilities now are to play back the streamed graphics and audio and to send its inputs to the server, which modern devices are more than capable of doing efficiently. Even better, this streaming solution is browser-compatible through the WebRTC protocol, meaning that developers have far less cross-platform compatibility to worry about, and users don’t need to download additional applications.

Diagram of render streaming process using Unreal Engine. Credit: Unreal Engine 2020.
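To make that loop concrete, here is a minimal sketch of it in Python. It is a toy simulation, not how Unity, Unreal Engine, or WebRTC actually implement streaming: the names `InputEvent`, `Frame`, `RenderServer`, and `run_session` are all hypothetical, the "network" is a plain function call, and a text description stands in for encoded pixels.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class InputEvent:
    """One batch of sensor data sent from the display device (hypothetical shape)."""
    touch: Optional[Tuple[float, float]]  # normalized screen position, if touched
    gyro_yaw_deg: float                   # change in heading since the last frame


@dataclass
class Frame:
    """A rendered frame sent back to the display device."""
    number: int
    description: str  # stands in for the encoded image data


@dataclass
class RenderServer:
    """Holds the scene state and does all of the heavy lifting."""
    camera_yaw_deg: float = 0.0
    objects_placed: int = 0
    frames_rendered: int = 0

    def step(self, event: InputEvent) -> Frame:
        # 1. Apply the inputs received from the display device.
        self.camera_yaw_deg = (self.camera_yaw_deg + event.gyro_yaw_deg) % 360
        if event.touch is not None:
            self.objects_placed += 1
        # 2. "Render" the next frame from the updated scene state.
        self.frames_rendered += 1
        return Frame(
            number=self.frames_rendered,
            description=f"yaw={self.camera_yaw_deg:.1f} objects={self.objects_placed}",
        )


def run_session(events: List[InputEvent]) -> List[Frame]:
    """Simulate the device/server loop: send input, receive frame, display it."""
    server = RenderServer()
    displayed: List[Frame] = []
    for event in events:
        frame = server.step(event)  # in a real system this is a network round trip
        displayed.append(frame)     # the device only has to show what it receives
    return displayed


if __name__ == "__main__":
    session = run_session([
        InputEvent(touch=None, gyro_yaw_deg=10.0),
        InputEvent(touch=(0.5, 0.5), gyro_yaw_deg=355.0),
    ])
    for frame in session:
        print(frame.number, frame.description)
```

Notice that the device side of the loop never touches the scene state. Everything expensive lives in `RenderServer.step`, which is exactly the part that gets moved off the phone.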

There is just one problem: it takes time for input signals to travel from the streaming device to the server, be processed, and have the results transmitted back. This is not a new challenge; we have long struggled with the same latency issue in multiplayer video games and other network applications. For render streaming to become an attractive, widespread option, 5G network connectivity and speeds will be necessary to reduce latency to tolerable levels.
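A bit of back-of-the-envelope arithmetic shows why the network matters so much. The sketch below adds up the stages between an input and the frame that reflects it. The function names are hypothetical, and every number in the example is an illustrative assumption, not a measurement of any real network or device.

```python
def input_to_frame_ms(network_rtt_ms: float, render_ms: float,
                      encode_ms: float, decode_ms: float,
                      display_ms: float) -> float:
    """Total delay between an input and the frame that reflects it."""
    return network_rtt_ms + render_ms + encode_ms + decode_ms + display_ms


def frames_behind(latency_ms: float, fps: float) -> float:
    """How many frames old the picture is, relative to the user's latest input."""
    return latency_ms * fps / 1000.0


if __name__ == "__main__":
    # Illustrative assumptions only: identical server and device work,
    # with just the network round trip changing between the two cases.
    slow = input_to_frame_ms(network_rtt_ms=60, render_ms=8,
                             encode_ms=5, decode_ms=5, display_ms=8)
    fast = input_to_frame_ms(network_rtt_ms=15, render_ms=8,
                             encode_ms=5, decode_ms=5, display_ms=8)
    print(f"slower network: {slow} ms, {frames_behind(slow, 60):.2f} frames behind at 60 FPS")
    print(f"faster network: {fast} ms, {frames_behind(fast, 60):.2f} frames behind at 60 FPS")
```

Under these assumed numbers, the round trip is the largest single term in the slower case, which is why a faster network moves the total more than shaving a millisecond off any other stage.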

Regardless, it would be wise for developers to get familiar with the technology. All the components are already at hand; not only is 5G availability increasing, but Unity and Unreal Engine have also released native support for render streaming, and cloud services catering to clients who want render streaming at scale are beginning to crop up. The future is already here — we just need to grab onto our screens and watch as the cloud renders the ride.
