Why government needs policy-aware prompting

Agency leaders are modernizing citizen-facing services under high expectations for transparency, equity, and security. AI prompting in government can deliver faster, more consistent responses, but it also introduces new risks when prompts and models operate without institutional guardrails. Policy-aware AI, meaning prompting that is explicitly grounded in statutes, records-retention rules, privacy mandates, and accessibility requirements, lets agencies deliver predictable outcomes while meeting their legal and ethical obligations.

For a CIO, the imperative is twofold: accelerate service improvements without undermining trust. Privacy law constrains what data each automated interaction may touch, and records-retention rules mean any interaction can create or reference an official record. Accessibility and plain-writing requirements call for plain language and reading-level adaptations. Procurement and Authority to Operate (ATO) processes must be considered from the outset if a solution will touch sensitive data. Building policy into government AI prompting from the start makes the technology an amplifier for stewardship rather than an operational liability.

Use cases across the public service lifecycle

Once you adopt a policy-aware approach, the patterns repeat across many missions. AI-assisted FOIA processing can triage incoming requests, summarize responsive documents, and surface candidate statutory exemptions with citations that make each decision auditable. Eligibility pre-screening for benefits programs becomes an informed conversation when prompts embed program rules and required disclaimers, so that a pre-screening result is not mistaken for an official determination.

Contact centers are another fertile area: knowledge assistants augmented with policy references can answer routine questions in multiple languages and adapt tone and reading level for callers with accessibility needs. Grants and rulemaking portals benefit from automated comment analysis that highlights common themes and flags procedural noncompliance; when the prompting layer enforces citation of the relevant statutes or regulatory sections, analysts gain immediate context and traceability.

Building a policy-aware context layer

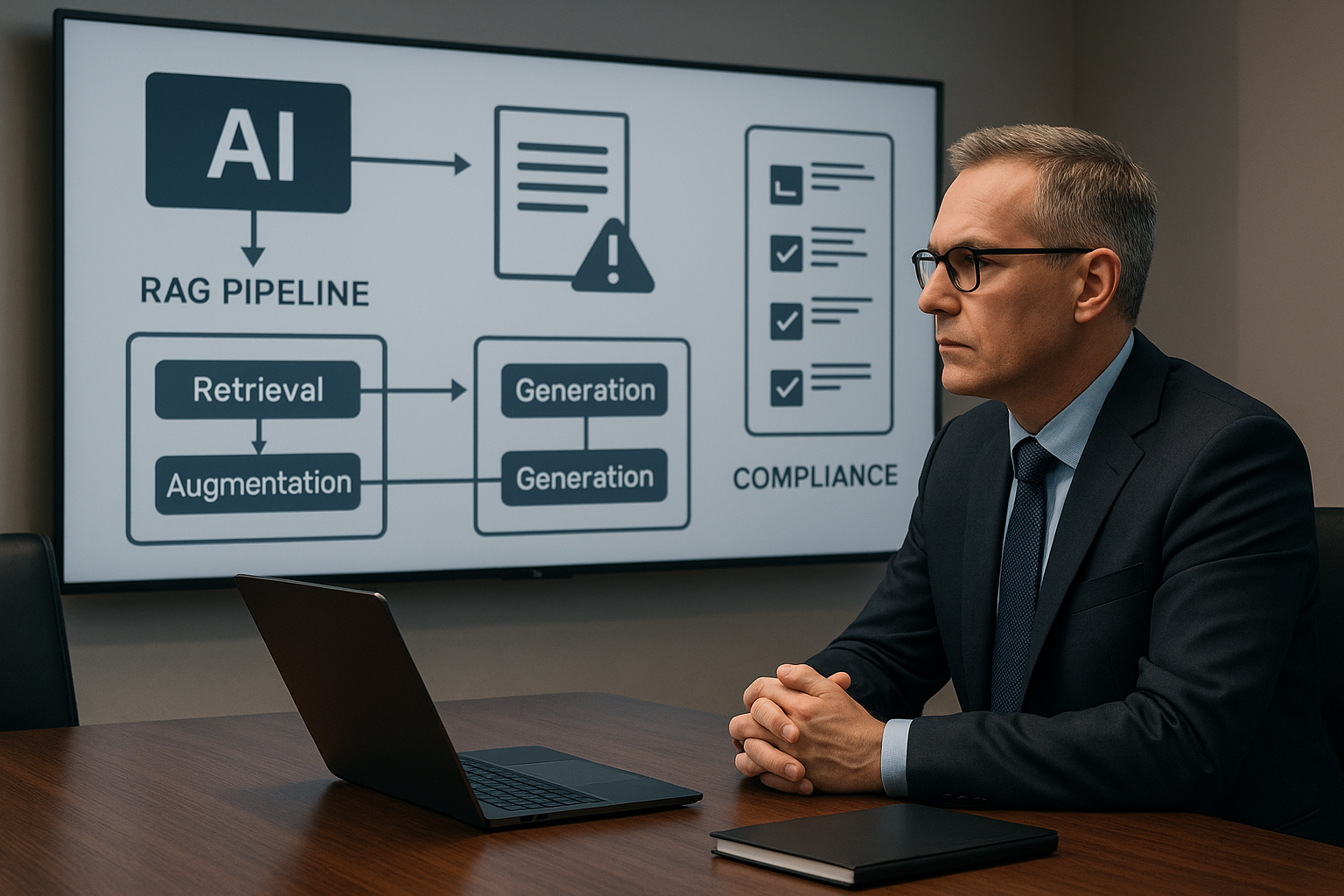

The practical core of policy-aware prompting is a context layer that binds model responses to authoritative sources. Retrieval-augmented generation (RAG) over statutes, regulations, agency playbooks, and approved FAQs pulls the relevant passages into the model's context window at query time instead of relying on what the model memorized during training. The same layer should implement policy-as-code: templates that automatically append mandated disclaimers and required appeals language and enforce a consistent citation format.
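To make the context layer concrete, here is a minimal Python sketch under stated assumptions: a tiny in-memory corpus, a naive keyword-overlap ranker standing in for a real vector index, and hypothetical `Passage`, `PolicyTemplate`, `retrieve`, `build_prompt`, and `finalize` names that are not any product's API. The citations and mandated text are placeholders. What it shows is that retrieved text goes into the prompt, while disclaimers and appeals language are appended by code rather than left to the model.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Passage:
    citation: str  # identifier of the authoritative source (illustrative values below)
    text: str


@dataclass(frozen=True)
class PolicyTemplate:
    # Mandated language lives in data, so every response gets it appended.
    disclaimer: str
    appeals_notice: str


def _tokens(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, corpus: list[Passage], k: int = 2) -> list[Passage]:
    """Rank passages by keyword overlap with the query (stand-in for vector search)."""
    q = _tokens(query)
    scored = [(len(q & _tokens(p.text)), p) for p in corpus]
    scored = [item for item in scored if item[0] > 0]
    scored.sort(key=lambda item: item[0], reverse=True)
    return [p for _, p in scored[:k]]


def build_prompt(query: str, passages: list[Passage]) -> str:
    """Place authoritative text in the context window and require citations."""
    context = "\n".join(f"[{p.citation}] {p.text}" for p in passages)
    return (
        "Answer using only the sources below. Cite each source you rely on by its "
        "bracketed identifier. If the sources do not answer the question, say so.\n\n"
        f"Sources:\n{context}\n\nQuestion: {query}"
    )


def finalize(answer: str, passages: list[Passage], template: PolicyTemplate) -> str:
    """Append citations and mandated language regardless of what the model returned."""
    cites = "; ".join(p.citation for p in passages) or "none"
    return f"{answer}\n\nSources: {cites}\n{template.disclaimer}\n{template.appeals_notice}"


# Illustrative corpus and template (hypothetical content).
corpus = [
    Passage("FAQ-12", "You may renew a permit online up to 30 days before it expires."),
    Passage("FAQ-40", "Lost cards can be replaced at any field office."),
]
template = PolicyTemplate(
    disclaimer="This is general information, not an official determination.",
    appeals_notice="If you disagree with a decision, you may request a review.",
)
question = "How do I renew my permit online?"
hits = retrieve(question, corpus)
prompt = build_prompt(question, hits)
# A model call would go here; a fixed string stands in for its answer.
response = finalize("You can renew online up to 30 days before expiry [FAQ-12].", hits, template)
```

Appending mandated language in `finalize` rather than asking the model to include it means the required text appears even when the model omits it.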

Accessible communication needs to be explicit in templates. Prompt libraries should include cues for plain-language rewriting, explicit reading-level targets, and screen-reader-friendly or multilingual output formats. Treat these accessibility cues as policy parameters so that every response can be measured against compliance targets rather than left to ad hoc style choices.
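Treating reading level as a parameter means it can be checked in code. The sketch below computes the standard Flesch-Kincaid grade formula; the syllable counter is a rough vowel-group heuristic, and the grade-8 target is a hypothetical policy setting, so a production check would use a vetted readability library and the agency's own targets.

```python
import re

PLAIN_LANGUAGE_TARGET = 8.0  # hypothetical policy parameter (maximum grade level)


def count_syllables(word: str) -> int:
    """Rough heuristic: count vowel groups, then drop a trailing silent 'e'."""
    word = word.lower()
    count = len(re.findall(r"[aeiouy]+", word))
    if word.endswith("e") and not word.endswith("le") and count > 1:
        count -= 1
    return max(count, 1)


def fk_grade(text: str) -> float:
    """Flesch-Kincaid grade: 0.39*(words/sentences) + 11.8*(syllables/words) - 15.59."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    if not sentences or not words:
        return 0.0
    syllables = sum(count_syllables(w) for w in words)
    return 0.39 * len(words) / len(sentences) + 11.8 * syllables / len(words) - 15.59


def meets_reading_level(text: str, max_grade: float = PLAIN_LANGUAGE_TARGET) -> bool:
    """True when the text scores at or below the configured grade level."""
    return fk_grade(text) <= max_grade
```

A response that fails the check could be sent back through a plain-language rewriting prompt or flagged for staff editing.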

Security, privacy, and equity guardrails

Public sector deployments carry distinct security and privacy obligations. Hosting choices aligned with FedRAMP or StateRAMP and clear data-isolation designs must be part of procurement conversations early. Equally important is PII minimization: before prompts are constructed, systems should redact or tokenize personally identifiable information, substituting stable pseudonymous identifiers that still support record linkage without exposing raw data to external models.
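One way to implement tokenization with linkable identifiers is a keyed hash (HMAC): the same value always maps to the same token, so records can still be joined, while someone without the key cannot recompute the mapping. This is a sketch only; the two regex detectors, the token format, and the inline key are illustrative assumptions, and real deployments need broader, tested PII detection and keys held in a managed secrets store.

```python
import hashlib
import hmac
import re

# Hypothetical detectors; production systems need broader, tested PII detection.
PII_PATTERNS = {
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
}


def pseudonym(kind: str, value: str, key: bytes) -> str:
    """Keyed hash of the raw value: stable for linkage, not recomputable without the key."""
    digest = hmac.new(key, f"{kind}:{value}".encode("utf-8"), hashlib.sha256).hexdigest()
    return f"[{kind}:{digest[:12]}]"


def minimize(text: str, key: bytes) -> str:
    """Replace every detected PII value with its pseudonymous token before prompting."""
    for kind, pattern in PII_PATTERNS.items():
        text = pattern.sub(lambda m: pseudonym(kind, m.group(0), key), text)
    return text


# Hypothetical key for illustration only; never hard-code keys in production.
KEY = b"example-key-load-from-a-secrets-manager"
first = minimize("Applicant SSN 123-45-6789, email jane.doe@example.gov.", KEY)
second = minimize("Follow-up for 123-45-6789.", KEY)
```

Because both strings yield the same `[SSN:…]` token, downstream steps can link the two interactions without the model ever seeing the raw number.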

Equity considerations also require engineering controls. Bias testing against protected classes should be routine, with transparent refusal modes defined in the prompting layer when a request risks discriminatory inference. Those refusal modes should be explainable—showing why the system declined to answer and directing the citizen to a human reviewer—so trust is maintained and administrative remedies remain accessible.
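An explainable refusal can be modeled as a structured decision that carries its reason and a route to a human. The keyword rules below are a deliberately crude, hypothetical placeholder for a properly tested classifier; the attribute and cue lists and the referral wording are assumptions, shown only to illustrate the shape of the output.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Hypothetical rule tables; a real system would rely on tested classifiers.
PROTECTED_ATTRIBUTES = ("race", "ethnicity", "religion", "national origin", "disability", "sex")
INFERENCE_CUES = ("guess", "infer", "likely", "predict", "based on their name")


def _mentions(term: str, text: str) -> bool:
    # Word boundaries keep "race" from matching "trace" or "grace".
    return re.search(rf"\b{re.escape(term)}\b", text) is not None


@dataclass(frozen=True)
class GuardrailDecision:
    allowed: bool
    reason: Optional[str] = None    # shown to the person, so the refusal is explainable
    referral: Optional[str] = None  # route to a human reviewer


def check_request(text: str) -> GuardrailDecision:
    """Refuse requests that ask the system to infer a protected attribute."""
    lowered = text.lower()
    attribute = next((a for a in PROTECTED_ATTRIBUTES if _mentions(a, lowered)), None)
    if attribute and any(_mentions(cue, lowered) for cue in INFERENCE_CUES):
        return GuardrailDecision(
            allowed=False,
            reason=f"This service does not infer a person's {attribute}.",
            referral="A staff member can review your request; contact the agency help desk.",
        )
    return GuardrailDecision(allowed=True)
```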

Human oversight and records management

Trustworthy automation assumes humans remain in the loop where accountability matters. Design workflows with explicit human review checkpoints for determinations that affect entitlements or legal status. Every output that could be an official record should be logged immutably with citations to the statute or policy text used by the prompt. This enables defensible records retention and supports audits.
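Immutability is usually provided by write-once storage, but a hash chain is a simple way to make tampering detectable: each entry commits to the previous entry's hash. The in-memory `AuditLog` class below is a hypothetical sketch; the field names, citations, and reviewer ID are illustrative, and a real system would persist entries to retention-compliant storage.

```python
import hashlib
import json
from datetime import datetime, timezone
from typing import Optional

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


class AuditLog:
    """Append-only log in which each entry commits to the previous entry's hash,
    so editing any stored entry causes verification to fail."""

    def __init__(self) -> None:
        self.entries: list[dict] = []

    @staticmethod
    def _digest(body: dict) -> str:
        # Canonical JSON (sorted keys) so identical content always hashes identically.
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()

    def append(self, output: str, citations: list[str], reviewer: Optional[str] = None) -> dict:
        body = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "output": output,
            "citations": list(citations),  # copy so later caller edits cannot alter the record
            "reviewer": reviewer,
            "prev_hash": self.entries[-1]["hash"] if self.entries else GENESIS,
        }
        entry = {**body, "hash": self._digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        prev = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev or self._digest(body) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True


log = AuditLog()
log.append("Draft response: records withheld in part.", ["5 U.S.C. 552(b)(6)"], reviewer="analyst-17")
log.append("Acknowledgment letter sent.", ["Agency FOIA handbook, sec. 2"])
```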

Model cards, decision logs, and explainability artifacts should be published where feasible so external stakeholders can understand capabilities and limitations. Open data practices—redacting personal data but exposing aggregated metrics and decision rationales—reinforce public trust and demonstrate adherence to public sector AI governance principles.

Measuring impact and building the business case

To secure funding and buy-in, define outcomes that matter to both the agency and the public. Measure service-level improvements such as backlog reduction, average time to response, and rates of first-contact resolution for contact centers. Track citizen satisfaction and accessibility metrics to ensure the automation is truly improving access to services, not simply shifting the burden.

Financially, quantify cost-to-serve reductions and the potential redeployment of staff time from repetitive tasks to higher-value activities like case adjudication or outreach. Frame these benefits alongside risk metrics—error rates, review backlogs, and audit findings—so decision-makers see a balanced view of operational gains and governance responsibilities.
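The service and risk metrics above reduce to simple ratios. In the sketch below, the `PeriodMetrics` fields are hypothetical and every number is invented purely to show the arithmetic; none of it is a measured result.

```python
from dataclasses import dataclass


@dataclass
class PeriodMetrics:
    backlog: int                 # open requests at period end
    closed: int                  # requests closed during the period
    total_response_days: float   # sum of days-to-response over closed requests
    contacts: int                # contact-center contacts handled
    resolved_first_contact: int  # contacts resolved without a follow-up
    operating_cost: float        # total cost of delivering the service
    outputs_reviewed: int        # AI outputs checked by a human reviewer
    outputs_corrected: int       # reviewed outputs that needed correction


def scorecard(m: PeriodMetrics) -> dict[str, float]:
    return {
        "avg_days_to_response": m.total_response_days / m.closed,
        "first_contact_resolution": m.resolved_first_contact / m.contacts,
        "cost_per_closed_request": m.operating_cost / m.closed,
        # Zero when no AI outputs were reviewed (e.g. a pre-automation baseline).
        "review_correction_rate": (m.outputs_corrected / m.outputs_reviewed) if m.outputs_reviewed else 0.0,
    }


def pct_change(before: float, after: float) -> float:
    return 100.0 * (after - before) / before


# Invented figures, only to show the arithmetic.
baseline = PeriodMetrics(1200, 400, 8000, 5000, 3000, 200_000, 0, 0)
pilot = PeriodMetrics(900, 500, 6000, 5200, 3640, 190_000, 500, 25)
before, after = scorecard(baseline), scorecard(pilot)
backlog_change = pct_change(baseline.backlog, pilot.backlog)  # -25.0 (%)
```

Reporting the correction rate next to the service gains keeps the governance cost visible in the same view.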

Integration with workflow and case systems

AI outputs become useful when they connect to action. Design APIs that feed RAG summaries, citations, and recommended next steps into case management and document repositories so staff can act on automated insights without duplicating work. Where routine document assembly is appropriate, pair prompts with robotic process automation to populate forms, attach necessary disclaimers, and route items to the correct team.
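A typed payload helps keep RAG output, citations, and next steps together when they are handed to a case system. The `CaseUpdate` schema below is hypothetical, not any case-management product's API; it refuses to serialize an update that lacks citations or a disclaimer and defaults to requiring human review.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class CaseUpdate:
    case_id: str
    summary: str
    citations: list[str]
    next_steps: list[str]
    disclaimers: list[str] = field(default_factory=list)
    requires_human_review: bool = True  # default to review; staff must clear the item

    def problems(self) -> list[str]:
        """Policy checks that must pass before the update leaves the prompting layer."""
        issues = []
        if not self.citations:
            issues.append("summary has no supporting citations")
        if not self.disclaimers:
            issues.append("mandated disclaimer missing")
        return issues

    def to_json(self) -> str:
        issues = self.problems()
        if issues:
            raise ValueError("; ".join(issues))
        return json.dumps(asdict(self))


# Hypothetical example values.
update = CaseUpdate(
    case_id="CASE-0001",
    summary="Requester seeks inspection reports for one facility.",
    citations=["5 U.S.C. 552(a)(3)"],
    next_steps=["Search the inspection records repository"],
    disclaimers=["Draft for staff review; not a final agency response."],
)
payload = json.loads(update.to_json())
```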

Event-driven triggers tied to intake portals let the system scale: a submitted FOIA request can automatically kick off triage prompts that produce a prioritized worklist and draft responsive language for human review. Remember that integration needs to respect security zones; sensitive documents should remain in controlled repositories with only metadata or tokenized references used in the prompt context.
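The event-driven flow can be sketched as a handler that turns an intake event into a triage prompt and a prioritized worklist. Everything here is hypothetical: the `IntakeEvent` fields, the `DOCREF-` reference format, and the in-process `TriageQueue` standing in for a message-queue subscriber. The model call is omitted. Note that the prompt carries only metadata and opaque references, never document text.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class IntakeEvent:
    request_id: str
    received: date
    expedited: bool
    doc_refs: tuple[str, ...] = ()  # opaque tokens; documents stay in the controlled repository


def build_triage_prompt(event: IntakeEvent) -> str:
    # Only metadata and opaque references enter the prompt, never document text.
    refs = ", ".join(event.doc_refs) or "none identified yet"
    return (
        f"Triage FOIA request {event.request_id} received {event.received.isoformat()}. "
        f"Expedited processing requested: {'yes' if event.expedited else 'no'}. "
        f"Candidate records (opaque references): {refs}. "
        "Suggest a processing track and draft acknowledgment language for human review."
    )


class TriageQueue:
    """In-process stand-in for a subscriber on an intake message queue."""

    def __init__(self) -> None:
        self._items: list[tuple[IntakeEvent, str]] = []

    def on_request_submitted(self, event: IntakeEvent) -> str:
        prompt = build_triage_prompt(event)
        self._items.append((event, prompt))  # the model call itself is out of scope here
        return prompt

    def worklist(self) -> list[str]:
        # Expedited requests first, then oldest first.
        ordered = sorted(self._items, key=lambda item: (not item[0].expedited, item[0].received))
        return [event.request_id for event, _ in ordered]


queue = TriageQueue()
p = queue.on_request_submitted(IntakeEvent("R-2", date(2024, 5, 3), False, ("DOCREF-9f2c",)))
queue.on_request_submitted(IntakeEvent("R-1", date(2024, 5, 1), False))
queue.on_request_submitted(IntakeEvent("R-3", date(2024, 5, 4), True))
```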

From pilot to enterprise scale

Successful scaling depends on repeatability. Establish sandbox pilots with clear governance and exit criteria that demonstrate measurable improvements and manageable risk. From those pilots, capture shared prompt libraries, reusable RAG indices, and pattern documentation so other teams can adopt proven configurations rather than reinventing the wheel.

Governance boards should oversee change management and vet shared libraries for compliance with policy-as-code standards. Training programs for staff must include not only tool usage but also how to interpret model outputs, escalate uncertainties, and document human reviews so institutional knowledge grows with deployment.
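Vetting a shared library against policy-as-code standards can be automated as a lint step that runs before a template is published. The required placeholders, phrases, and the SSN-like pattern below are hypothetical standards chosen for illustration; an agency would encode its own rules.

```python
import re

# Hypothetical policy-as-code standard for shared prompt templates.
REQUIRED_PLACEHOLDERS = {"{sources}", "{question}"}
REQUIRED_PHRASES = ("cite", "not an official determination")
FORBIDDEN_PATTERNS = (re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),)  # no SSN-like literals in templates


def lint_template(name: str, text: str) -> list[str]:
    """Return human-readable findings; an empty list means the template passes."""
    findings = []
    for placeholder in sorted(REQUIRED_PLACEHOLDERS):
        if placeholder not in text:
            findings.append(f"{name}: missing placeholder {placeholder}")
    lowered = text.lower()
    for phrase in REQUIRED_PHRASES:
        if phrase not in lowered:
            findings.append(f"{name}: missing required language '{phrase}'")
    for pattern in FORBIDDEN_PATTERNS:
        if pattern.search(text):
            findings.append(f"{name}: contains a literal that looks like PII")
    return findings


def lint_library(library: dict[str, str]) -> dict[str, list[str]]:
    """Map each failing template name to its findings; passing templates are omitted."""
    return {name: f for name, text in library.items() if (f := lint_template(name, text))}


library = {
    "benefits_faq": "Use {sources} to answer {question}. Cite each source. "
                    "This is not an official determination.",
    "draft_v0": "Answer {question} briefly.",
}
result = lint_library(library)
```

A check like this could run in a continuous integration pipeline so that a template with findings is held back until the governance board approves a fix.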

How we partner with agencies

We work with agencies to translate these practices into procurement-ready architectures and operational plans. Our services include policy-aware AI strategy and governance frameworks tailored to public sector constraints, prompt engineering and RAG buildouts that embed statutes and approved FAQs, and accessibility reviews to meet legal requirements. We also support procurement, ATO documentation, and hands-on training so teams can move from pilot to production with the controls auditors expect.

Policy-aware prompting is not a one-time project; it is an operating model that aligns technology with public service mandates. For CIOs and digital service leaders, the path forward is clear: start small with guarded pilots, codify policy in your prompting layer, and scale with governance, auditability, and transparency as your north stars. Doing so delivers faster, fairer, and more trustworthy services to the people your agency serves while keeping legal and ethical obligations front and center.