What Is Extended Reality?

Training a neural network to identify objects and remove backgrounds. Credit to Cyril Diagne, 2020.

ROSE decided to build a WebAR application for accessibility purposes and to take the burden off consumers. The WebAR experience is widely supported, deeply interactive, and highlights the unique details of KHAITE’s footwear designs in a way that offers endless creative freedom for the user. KHAITE shipped lookbooks, made by Chandelier Creative, with embedded QR codes that, when scanned, take you to the AR experience, where users can see the shoes to scale in their own homes. Consumers can tap whichever shoes they’d like to get a closer look at and place them in their homes, which let customers get a feel for the items without seeing them in person. The experience allowed KHAITE to create a visual presentation that otherwise would only exist inside one of its showrooms.

In the second iteration of the experience, for KHAITE’s pre-fall 2021 collection, ROSE expanded the experience to include models rendered in augmented reality, allowing users to see the clothing the way it was meant to be seen. Still using WebAR, this second experience used green screen video to build a full runway show, with models wearing the new line as they walk up and down whatever environment the user chooses.

Amid a global pandemic, the solutions to some of our most basic problems need some creativity. With COVID’s continued presence in our lives, social distancing may have to continue into a time that is usually filled with parties, family gatherings, and holiday festivities. People will be looking for ways to make new traditions and to connect with their loved ones from afar.

Patrón needed a way to help customers connect despite holiday plans shifting across the country, while also maintaining its brand narrative. To solve this specific problem, we worked with Patrón to create a first-of-its-kind digital wrapping as a special gift this holiday season and beyond. This experience provides a sentimental and original take on gifting alcohol, and it gives customers first-hand experience not just using augmented reality but harnessing it to make something themselves.

Gifters of Patrón can use a microsite developed by ROSE to create a custom wrapping including a photo, text, and stickers that will transform into a 360-degree augmented reality (AR) gift wrapping around their Patrón bottle. This gives customers a chance to use this emerging technology in a new way that hasn’t been available in retail before.

“With COVID-19 impacting most celebrations this holiday season, we wanted to give customers a way to continue to celebrate with each other while social distancing,” Nicole Riemer, the art director on the project said. “By creating a custom wrapping, customers can take the act of gifting alcohol from an easy to a thoughtful one. During a time when you might not be able to gift in person, creating a custom wrapping with photos, stickers, and text provides that personal touch that is missing from not being able to gift it in person.”

Using WebGL in both 2D and 3D allows users to see their content change between dimensions in real time. Gifters can then use built-in recording and sharing technology to share the gift with the recipient as well as on social media.

By giving customers the ability to customize their gift of Patrón for different occasions and gift recipients, we are showing them that Patrón isn’t the “mass brand” they think it is. With this virtual gift, distance is no longer a barrier to creating something thoughtful that nurtures customers’ need to grow and maintain their relationships.

“Creating these designs digitally allows for the process to be instantaneous and affordable, rather than waiting for something to get engraved or physically customized, without losing the ability to share that someone is thinking of you on social media,” Riemer said.

Using augmented reality for this experience had several advantages. The most obvious is that it provides a sentimental gift without anyone having to enter a store or be in the same physical space as the recipient, which helps maintain social distancing amid the pandemic. Additionally, augmented reality lets users generate their own content while the experience stays true to the PATRÓN brand.

“The challenge with AR has always been figuring out how we can take new dimensions and connect them to the ones we’re familiar with in creative, expressive, and helpful ways,” Eric Liang, front-end/AR engineer on the project said. “The AR experiences that ROSE has previously created have each addressed that challenge by taking something important to us — something unseen or out of the ordinary that we wanted to showcase — and constructing it in the user’s world. This time, we’re handing the reins to the user. In this new collaboration, we’re letting users create and realize something that’s uniquely their own.”

Harnessing the power of AR brings the holiday cheer customers may be missing into the palm of their hand and inside their home, connecting people who want to be together this holiday. PATRÓN also has a history of creating limited-run packaging and bottles, so a virtual extension of its exclusive boxes was a natural progression for the brand, and this experience offers customers peak exclusivity by letting them customize every individual bottle they purchase.

In designing this web application, we identified two types of users. Because Patrón’s target demographic for this experience is 21–35, we were less concerned with the user’s technological literacy. Additionally, since this started as a concept that would be pushed mainly through social media, we were bound to attract younger users who would already be at least slightly familiar with augmented reality through Snapchat and Instagram. After determining this demographic information for our target user, the next question was what a user would want to create with this tool. This led us to the following use cases:

Creator 1: The user that wants to create a really thoughtful collage that they want the recipient to see that they spent time on. They expect that their gift will be shown to others and potentially shared on social media in a similar fashion to birthday posts.

Creator 2: The user that is looking to create a quick gift that still wows the intended recipient. They want to expend minimal effort, but get the same praise and reaction as someone who spends a lot of time on their creation.

To satisfy the need for a quick gift, we created “themes” that users can choose from at the start of the experience: they upload a single photo and have a designed bottle in five clicks (including previewing their design). For those who want to spend more time on their creation, we provide the ability to start from scratch and choose the content that goes on every side of the bottle.

In choosing the predetermined content that users can apply to their digital bottles, we focused on three things. The first was to choose assets that could be used for multiple occasions and holidays and were non-denominational. The second was to underscore the socially distant benefit of this gift and to keep encouraging people to drink responsibly even when gatherings are discouraged. The third was to make sure that the assets could be used in many combinations and still create a wrapping that looks high end.

Once we determined the user experience and the content types that could be placed on the wrappings, we had to find a way to map users’ content onto a 3D bottle in real time, show users their creation on this model before they send the augmented reality link to their recipient, and ultimately render each individual experience in augmented reality.
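One way to picture the mapping problem: the flat design canvas is rolled around the bottle, so every canvas pixel corresponds to a point on the bottle’s surface. The sketch below assumes an idealized cylindrical bottle (the real model is not a perfect cylinder), and `canvasToUv` and `uvToCylinder` are hypothetical names for illustration, not code from the project.

```typescript
// A point on the flat wrapping canvas, in pixels (origin at top-left).
interface CanvasPoint {
  x: number;
  y: number;
}

// Texture coordinate, each component in [0, 1].
interface Uv {
  u: number;
  v: number;
}

interface Vec3 {
  x: number;
  y: number;
  z: number;
}

// Hypothetical helper: normalize canvas pixels into UV space.
// Canvas y grows downward while texture v conventionally grows upward,
// so v is flipped.
function canvasToUv(p: CanvasPoint, width: number, height: number): Uv {
  return { u: p.x / width, v: 1 - p.y / height };
}

// Hypothetical helper: place a UV coordinate on an idealized cylinder
// (an assumption; the real bottle is not a perfect cylinder) centred on
// the y axis with its base at y = 0. u sweeps once around the
// circumference and v runs from the base to the top.
function uvToCylinder(uv: Uv, radius: number, cylinderHeight: number): Vec3 {
  const theta = uv.u * 2 * Math.PI;
  return {
    x: radius * Math.cos(theta),
    y: uv.v * cylinderHeight,
    z: radius * Math.sin(theta),
  };
}

// Example: a sticker a quarter of the way across the top edge of a
// 1000 x 500 canvas lands a quarter turn around the top of the cylinder.
const sticker = canvasToUv({ x: 250, y: 0 }, 1000, 500);
const onBottle = uvToCylinder(sticker, 2, 10);
console.log(sticker, onBottle);
```

In a production model, the UV coordinates authored by the 3D artist encode a mapping of this kind, so the canvas can simply be uploaded as a texture and the GPU samples it per pixel; code like this is mainly useful for reasoning about where a sticker will end up.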

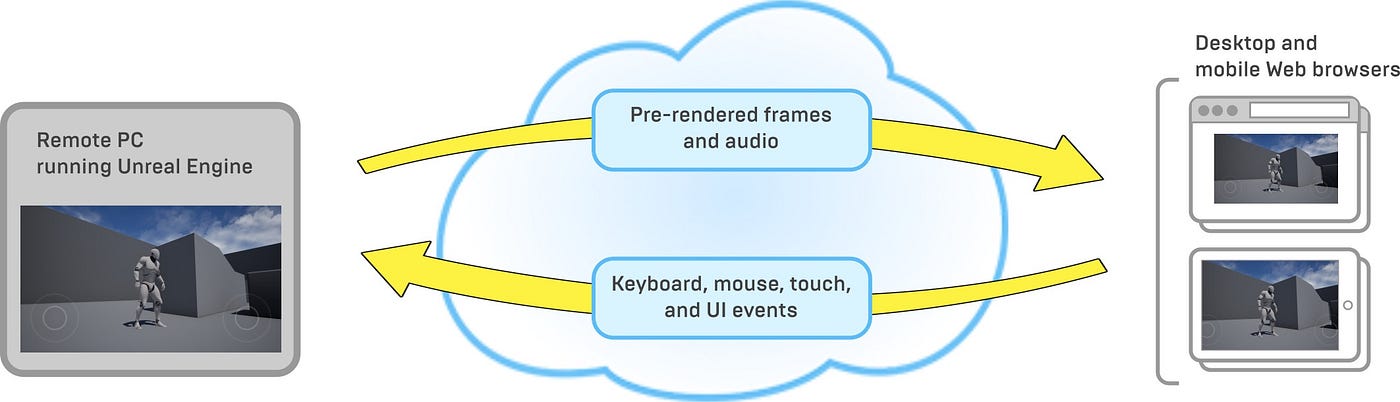

The technical inspiration for this experience began with an understanding of how WebXR, the browser API for augmented and virtual reality, operates. A WebXR experience rests on a conceptual model of everything that exists in an extended reality scene: where each virtual object is, where light is coming from, where the “camera” stands and observes, how the user interacts with and changes all of these things, and so on. WebXR itself supplies the device’s position and orientation in the real world, while the 3D framework built on top of it keeps track of the virtual objects and lights. Imagine closing your eyes and understanding where everything around you in the room is: your desk, the floor, a lamp, rays of sunlight coming through a window, even your own hands. Now open your eyes and actually observe those things. That’s what WebGL does. WebGL is the graphics API that takes this model and paints it on a screen, rendering the virtual existence of matter and light into visibility.

While we wanted to capture the same magic of seeing something you create exist in 3D space, it was important that it would be accessible to everyone, both in terms of the technology and creativity. We wanted it to be usable from an everyday mobile device, without the need for expensive VR technology. We also didn’t want to require the user to be a painter, have an empty warehouse to dance around with VR goggles on, or have an intricate understanding of 3D sculpture or set design to maximize the reward of the experience.

There were a lot of moving parts that needed to be addressed. There needed to be a simple, intuitive interface for the user to customize their design and we needed to apply the design to a 3D model composed of a number of different materials and textures, from soft cork to clear pebbled glass to shiny metallic gift wrap. The experience needed to show that customized bottle back to the user in an interactive, attention-grabbing 3D experience. And finally, we needed to be able to scale the experience for a mass marketing campaign, which meant preparing for a large number of concurrent users with different devices and intents. We settled on technologies to address each of these challenges: a React/HTML Canvas microsite to design the wrapping, an 8th Wall/A-FRAME experience to view it, and a serverless API backend with cloud storage to support scale.
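Splitting the work between a design microsite, an AR viewer, and a serverless backend implies that each design can be saved as data and reloaded elsewhere. A minimal sketch of such a document follows; the `WrappingDesign` schema, its field names, and the 30-layer cap are hypothetical choices for illustration, not the project’s actual format.

```typescript
// Hypothetical schema for one user-created wrapping. None of these field
// names come from the project; they only illustrate how the design
// microsite and the AR viewer could share a small JSON document.
type LayerKind = "photo" | "text" | "sticker";

interface Layer {
  kind: LayerKind;
  // Uploaded asset URL for photos and stickers, or the literal text.
  content: string;
  // Position on the flat wrapping, normalized to [0, 1].
  x: number;
  y: number;
  rotationDeg: number;
  scale: number;
}

interface WrappingDesign {
  version: 1;
  // null means "no background": the glass should show through.
  background: string | null;
  layers: Layer[];
}

// Returns human-readable problems; an empty array means the design is storable.
// maxLayers is a hypothetical limit.
function validateDesign(design: WrappingDesign, maxLayers = 30): string[] {
  const problems: string[] = [];
  if (design.layers.length > maxLayers) {
    problems.push(`too many layers (${design.layers.length} > ${maxLayers})`);
  }
  design.layers.forEach((layer, i) => {
    if (layer.x < 0 || layer.x > 1 || layer.y < 0 || layer.y > 1) {
      problems.push(`layer ${i} is off the wrapping`);
    }
    if (!(layer.scale > 0)) problems.push(`layer ${i} has a non-positive scale`);
    if (layer.kind === "text" && layer.content.trim() === "") {
      problems.push(`layer ${i} has empty text`);
    }
  });
  return problems;
}

// What a hypothetical serverless "save design" endpoint might store.
function serializeDesign(design: WrappingDesign): string {
  const problems = validateDesign(design);
  if (problems.length > 0) throw new Error(problems.join("; "));
  return JSON.stringify(design);
}

// What the AR viewer might do after fetching the stored JSON.
function parseDesign(json: string): WrappingDesign {
  const data = JSON.parse(json) as Partial<WrappingDesign>;
  if (data.version !== 1 || !Array.isArray(data.layers)) {
    throw new Error("not a version-1 wrapping design");
  }
  const design = data as WrappingDesign;
  const problems = validateDesign(design);
  if (problems.length > 0) throw new Error(problems.join("; "));
  return design;
}
```

Validating on both save and load is a deliberate choice in this sketch: a viewer link may be opened long after the design was created, so the viewer should not trust stored data blindly.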

The next step was to source a 3D model of the bottle. We worked with a 3D artist and modeler, iterating on the model until each detail was as accurate as possible, and then continued to optimize our renders. This involved adjusting the lighting through trial and error until we found the setup that best illuminated the bottle and made the glass and its reflections as lifelike as possible, as well as customizing the physical material shaders for each node of the finalized model: the cork, the ribbon, the glass, the liquid, and the wrapping.

3D model renderings of the Silver Patrón bottle.

Later on, we also realized that we needed a dynamic approach to the wrapping’s transparency. If the user chose to lay their graphics directly over the glass without a background, those stickers, photos, and text would need to be opaque while the glass stayed transparent. The answer was to take the texture map generated for each user-created design and filter it into black and white, so that it could effortlessly serve double duty as an alpha map controlling transparency.

Example of an alpha map.
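The black-and-white filtering step can be sketched as a single pass over the design’s pixels. This is an illustration under stated assumptions, not the project’s code: the name `toAlphaMap` and the threshold of 8 are hypothetical, and it assumes the design canvas is transparent wherever the user added nothing.

```typescript
// Build a black-and-white alpha map from RGBA pixel bytes, laid out like
// the `data` array of a canvas ImageData (4 bytes per pixel).
// Assumption: the design canvas is transparent wherever the user placed
// nothing, so the source alpha channel tells us where content is.
function toAlphaMap(rgba: Uint8ClampedArray, threshold = 8): Uint8ClampedArray {
  if (rgba.length % 4 !== 0) {
    throw new Error("expected 4 bytes per pixel");
  }
  const out = new Uint8ClampedArray(rgba.length);
  for (let i = 0; i < rgba.length; i += 4) {
    // Content (alpha above the hypothetical threshold) becomes white, i.e.
    // opaque; empty pixels become black, letting the glass show through.
    const value = rgba[i + 3] > threshold ? 255 : 0;
    out[i] = value;
    out[i + 1] = value;
    out[i + 2] = value;
    // The map itself is fully opaque; only its brightness carries meaning.
    out[i + 3] = 255;
  }
  return out;
}

// Two pixels: an empty one, then an opaque orange sticker pixel.
const mask = toAlphaMap(new Uint8ClampedArray([10, 20, 30, 0, 200, 100, 50, 255]));
console.log(Array.from(mask));
```

In three.js, on which A-Frame is built, a material’s `alphaMap` treats black as fully transparent and white as fully opaque (the material also needs transparency enabled), which is what lets a map like this double as the transparency control. A hard threshold gives crisp edges; copying the source alpha into the grey value instead would preserve soft, anti-aliased sticker edges.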

While the experience would be accessible to everyone, we wanted those who had a Patrón bottle handy to be able to integrate it into the experience. It’s not yet feasible to use a real-life bottle of Patrón to anchor the experience, so we looked outside the box and settled on the actual box that each bottle of Patrón comes in. This gave us the opportunity to leverage 8th Wall’s image target feature, using the Patrón bottle image on the side of each box to trigger the dramatic emergence of the virtual bottle from the physical box.
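Supporting both paths, with or without a physical box, means the viewer has to decide where the bottle is anchored at any moment. A small state machine is one way to express that. The states, the simplified event names, and the rule that a recognized box takes priority are all assumptions for illustration; they are not 8th Wall’s event names or the project’s logic.

```typescript
// Hypothetical anchoring logic: the bottle either emerges from a recognized
// Patrón box (image target) or sits on a surface the user tapped.
type AnchorState =
  | { kind: "searching" }
  | { kind: "onBox"; targetName: string }
  | { kind: "onSurface"; x: number; z: number };

// Simplified, hypothetical events. In a real 8th Wall app these would be
// driven by its image-target events and touch input, whose actual names
// and payloads differ from these.
type AnchorEvent =
  | { type: "imageFound"; targetName: string }
  | { type: "imageLost" }
  | { type: "surfaceTap"; x: number; z: number };

function nextAnchor(state: AnchorState, event: AnchorEvent): AnchorState {
  if (event.type === "imageFound") {
    // Assumed policy: a recognized box always takes over, since it gives
    // the more dramatic entrance.
    return { kind: "onBox", targetName: event.targetName };
  }
  if (event.type === "imageLost") {
    // Losing the box sends the user back to searching; a bottle already
    // placed on a surface is unaffected.
    return state.kind === "onBox" ? { kind: "searching" } : state;
  }
  // surfaceTap: only place on the ground while no box is being tracked.
  return state.kind === "onBox" ? state : { kind: "onSurface", x: event.x, z: event.z };
}
```

Keeping this decision in one pure function makes the fallback behavior easy to test without a camera feed.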

Built to share on social, this augmented reality experience allows for recording within the WebAR experience.

Those without a box can watch the bottle appear on the plane where they placed it in the experience. Adding typical controls like pinch to zoom and finger rotation made it easy for users to examine the bottle and the details of the design, and we added 8th Wall’s Media Recorder capability to further boost the shareability of the experience.
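Pinch to zoom and finger rotation come down to a little arithmetic on touch positions. The sketch below is a hypothetical, framework-free version of that math; the function names, scale limits, and sensitivity are illustrative, and this is not 8th Wall’s implementation.

```typescript
interface TouchPoint {
  x: number;
  y: number;
}

function fingerDistance(a: TouchPoint, b: TouchPoint): number {
  return Math.hypot(b.x - a.x, b.y - a.y);
}

// Two-finger pinch: scale in proportion to how far the fingers have spread
// since the gesture began, clamped to hypothetical limits so the bottle
// can neither vanish nor swallow the room.
function pinchScale(
  startScale: number,
  start: [TouchPoint, TouchPoint],
  now: [TouchPoint, TouchPoint],
  minScale = 0.5,
  maxScale = 3,
): number {
  const startDistance = fingerDistance(start[0], start[1]);
  if (startDistance === 0) return startScale; // degenerate gesture; ignore it
  const scale = startScale * (fingerDistance(now[0], now[1]) / startDistance);
  return Math.min(maxScale, Math.max(minScale, scale));
}

// One-finger horizontal drag: rotate about the bottle's vertical axis.
// degreesPerPixel is a hypothetical sensitivity value.
function dragYaw(startYawDeg: number, startX: number, currentX: number, degreesPerPixel = 0.5): number {
  return startYawDeg + (currentX - startX) * degreesPerPixel;
}
```

Measuring each frame against the finger distance at the start of the gesture, rather than multiplying per-frame ratios together, keeps rounding error from accumulating over a long pinch and keeps the clamping behavior predictable.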

As companies look ahead to a greener and more sustainable future, the concept of virtual wrapping and virtual packaging is likely to expand. As augmented reality moves from an emerging technology to an adopted one, user-generated AR content will take center stage, and experiences like this one will enable everyday users to create with AR technology. As every industry grapples with how to stay competitive and stay afloat, innovation is the way forward. This is the tip of the iceberg when it comes to what augmented reality can accomplish.

We are excited to continue innovating and bringing projects like these to life. We believe anyone can innovate and that process is vital amid the current economic landscape. Our passion for emerging technologies and augmented reality is immense and our work will only continue to reflect that. We’re looking forward to sharing more soon.

Ashley Nelson: Concept and Strategy, UX Copywriter

Eric Liang: Front-end/AR engineer

Eugene Park: Experience Design

Leonardo Malave: Back-end/AR engineer

Marie Liao: QA Engineer

Nicole Riemer: Concept and Strategy, Art Direction, and Experience Design

Yolan Baker: Project Manager

The current state of the world has changed how ROSE, a Black-owned business, operates day to day. We view ROSE as a vehicle for improving the world, both in how we support each other internally and in the impact of the products we bring to life. We also acknowledge that as a Black-owned tech firm, we have an innate privilege with our platform. We have the ability to create change through technology, and with that privilege comes a deeply rooted responsibility. Bail Out Network was our way of assisting in the fight for Black equality without distracting from the injustices currently happening.

After the death of George Floyd sparked protests across the world, we found ourselves in conversations with the entire staff about how we could make a positive impact. We quickly saw the systematic arrest of protesters around the country, and the flood of donations to community bail funds that have been integral to fighting police overreach for decades.

We wanted to make locating these funds, and their donation portals, as easy as possible as police violence became visible on a daily basis. So we scoured the internet for every bail fund we could find, along with any lawyers or law firms that had publicly offered to represent those arrested for protesting free of charge. The website launched in under 24 hours, on June 2, as a rapid response to the police violence being seen across the country.

This project started as a way for us to collect bail funds in one place for people looking to lend a hand in their communities as police began systematically targeting protesters. As more time passed, we wanted to expand how Bail Out Network could be used as a resource in the fight against police brutality and for equity for Black and brown bodies.

The site now has a collection of resources that are dedicated to helping the most marginalized communities — focusing on the Black Trans community, Black LGBTQ+ community, Black youth, Black incarcerated people and other groups that lack protection from this country’s institutions. The site remains open for submissions, and if people come to the site and want to submit new resources they can add them at any time.

We firmly believe, as should all people, that Black Lives Matter and that the people protesting should not face punishment for doing what is right. As people continue to protest, fighting for the destruction of systemic racism and demanding a complete overhaul of the United States policing system, they’re going to need more money, more resources, and more bodies to achieve these goals. We will continue to promote organizations that are protecting those who have been disenfranchised by the political, economic, and social structures that exist within the fabric of the United States. This database will be updated as more resources become available.

The Team

Launched in June 2020, the idea for this project built upon Rose Digital’s history of using technology for good in times of public crisis (see also, Help or Get Help). Ashley Nelson, copywriter, originated the idea and identified the need within the current environment to connect those protesting with legal aid and easy access to resources. Nicole Riemer, art director, created the visual direction and UX of the site with Ashley writing the copy.

Bail Out Network will continue to consolidate resources that anyone can use to support the Black Lives Matter movement. For more information on ROSE, please visit builtbyrose.co.

Believe it or not, a few short months ago the main event dominating the news cycle wasn’t coronavirus, but the presidential election. The Democratic primaries were different from years past, and not just because the number of candidates running could fill a small football field. One thing that stood out to our team was the record spending that occurred this election cycle. Discussions about campaign finance began to swirl when Michael Bloomberg entered the race, funding his entire campaign with his personal fortune and raising questions about what money should and shouldn’t buy while running for office. We began thinking about a way to contextualize the immensity of campaign spending through the language we speak best: technology. Those conversations, and the desire to use technology to answer that question, were the origin of Pay to Play. Because primaries were postponed and the race narrowed to a single candidate from each party, we considered not releasing this experience.

However, with the new economic pressures on American families due to coronavirus and the current volatile international economy, we believed the relationship between money and politics was worth exploring. This project considers the disconnect between the monetary impact of the political process and the needs of everyday Americans.

The staggering amount of money spent by Democratic candidates in the 2020 election left us wondering how that money could have been spent on infrastructure and on funding the platforms those candidates had campaigned on. We designed Pay to Play as a way to look back on the record amount of money spent by Democratic candidates who have ended their bids. As another comparison, we also included how much several Republican contenders in the 2016 presidential election spent on their campaigns.

We designed this experience to visualize our internal discussions and the conversations happening in the U.S. during this tumultuous time, and in doing so we wanted to answer the question: “What else could we have done with that money?”

Pay to Play was developed using 8th Wall as the hosting platform. As a web-specific AR platform, 8th Wall allows anyone with a mobile device and an internet connection to access the comparative experience. Users can compare campaign spending from the seven Democratic candidates who spent the most on their presidential runs, as well as the top seven Republican candidates from the 2016 presidential election. The experience has different “common good” filters, and each filter is paired with a representative 3D object that fills the space with a correspondingly scaled number of objects. With each selection, the data simultaneously updates in the upper left corner.

Using augmented reality for data visualization can evoke emotional reactions from the user. This experience showcases the immensity of campaign spending with cascading, scaled objects that fill the user’s view, as though they could overflow from the screen at any moment. Because the experience was built on 8th Wall and runs in the browser, keeping file sizes and the number of rendered objects down was important for optimizing load time. To speed up loading and allow for easier comparison, the number of objects was scaled. While AR can make data more manageable for users, it can also create emotional connections through hands-on participation with the product.
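One way to scale the number of objects is to give every candidate the same scale, chosen so that the biggest spender just fits a render budget; that caps what the phone has to draw while keeping the piles directly comparable. A sketch, assuming a hypothetical per-unit cost and budget; the dollar amounts below are illustrative placeholders, not the campaign figures used in the experience.

```typescript
interface Candidate {
  name: string;
  spentDollars: number;
}

// How many whole units of a "common good" the money could buy.
function unitsAffordable(spentDollars: number, unitCostDollars: number): number {
  return Math.floor(spentDollars / unitCostDollars);
}

// One shared scale for every candidate, chosen so the biggest spender fits
// within the (hypothetical) render budget; sharing it keeps piles comparable.
function sharedUnitsPerObject(candidates: Candidate[], unitCostDollars: number, maxObjects: number): number {
  const maxUnits = Math.max(0, ...candidates.map((c) => unitsAffordable(c.spentDollars, unitCostDollars)));
  return Math.max(1, Math.ceil(maxUnits / maxObjects));
}

// Number of 3D objects to drop for one candidate at the shared scale.
function objectsToRender(c: Candidate, unitCostDollars: number, unitsPerObject: number): number {
  return Math.round(unitsAffordable(c.spentDollars, unitCostDollars) / unitsPerObject);
}

// Hypothetical figures for illustration only: a $1 unit and a 500-object budget.
const candidates: Candidate[] = [
  { name: "Candidate A", spentDollars: 1_000_000 },
  { name: "Candidate B", spentDollars: 250_000 },
];
const perObject = sharedUnitsPerObject(candidates, 1, 500); // 2000 units per object
for (const c of candidates) {
  console.log(c.name, objectsToRender(c, 1, perObject)); // A: 500, B: 125
}
```

Capping each candidate independently would be simpler, but it would make a large spender and a very large spender look identical once both hit the cap, which defeats the comparison.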

We found that the best way to offer an immersive extended reality experience, while still offering relevant information and options to a user, is to combine the XR portion with a heads-up display that lies on top without obstructing the view. As such, this project could immediately be divided into two parts: building the HUD and coding the 3D model portion. We used A-Frame, a 3D framework built on Three.js and HTML, to bridge the gap. By representing our 3D assets and behaviors as HTML, we were free to create our HUD in pure HTML and have it communicate and interact seamlessly with the A-Frame components.
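Because A-Frame entities are ordinary DOM elements, HUD handlers can update them directly, for example with `setAttribute` or by emitting events with A-Frame’s `emit`. As the HUD grows, it can also help to keep the user’s selection in one place that both halves read from. The following is a hypothetical, framework-free sketch of that idea; `SelectionStore` and its fields are illustrative names, not code from Pay to Play.

```typescript
// The one piece of state both the HTML HUD and the 3D scene care about.
interface HudSelection {
  candidate: string;
  commonGood: string;
}

type Listener = (selection: HudSelection) => void;

// Hypothetical store: HUD buttons call `update`, and both the HUD readout
// and the scene code subscribe, so the two can never disagree.
class SelectionStore {
  private current: HudSelection;
  private listeners: Listener[] = [];

  constructor(initial: HudSelection) {
    this.current = initial;
  }

  get(): HudSelection {
    return this.current;
  }

  // Registers a listener and returns a function that removes it.
  subscribe(listener: Listener): () => void {
    this.listeners.push(listener);
    return () => {
      this.listeners = this.listeners.filter((l) => l !== listener);
    };
  }

  // Merges a partial change into the selection and notifies every listener.
  update(patch: Partial<HudSelection>): void {
    this.current = { ...this.current, ...patch };
    for (const listener of this.listeners) listener(this.current);
  }
}
```

In a scene built this way, one subscriber would recompute the object count and restart the cascade, while another rewrites the figures in the HUD corner.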

We found that much of the challenge of this project was using AR in a way that was accessible to as many people as possible while still maintaining the core identity of the project — using numerical scale as a way to evoke a reaction from the user. Rendering any 3D model in a web browser can be an expensive operation. Rendering thousands of them would tax a smartphone’s hardware to the point of unusability. We ended up approaching this by leaning into the idea of scale: we didn’t need exacting detail if the idea was to overwhelm the user with a huge pile of items; we just needed enough to make it clear what each item was. So we selected simple models with fewer polygons, decimated their numbers of faces as low as we could, and reduced the resolution on their textures to minimize file size. The end result worked out — we had piles of apples that were clearly recognizable and deeply satisfying to watch cascade down from the sky.
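A quick calculation shows why lowering texture resolution pays off so fast: memory grows with the square of the side length, so dividing each side by four cuts an uncompressed texture to a sixteenth. The resolutions below are hypothetical examples rather than the sizes used in the project, and the figure is decoded GPU memory, not download size, which depends on the image format.

```typescript
// Approximate GPU memory for an uncompressed RGBA8 texture (4 bytes per
// pixel), optionally including the chain of mipmaps down to 1 x 1.
// This is decoded memory, not the download size of the image file.
function textureBytes(width: number, height: number, withMipmaps: boolean): number {
  let total = width * height * 4;
  let w = width;
  let h = height;
  // Each mipmap level halves both sides (never below 1) until 1 x 1.
  while (withMipmaps && (w > 1 || h > 1)) {
    w = Math.max(1, Math.floor(w / 2));
    h = Math.max(1, Math.floor(h / 2));
    total += w * h * 4;
  }
  return total;
}

const full = textureBytes(1024, 1024, false); // 4,194,304 bytes (4 MiB)
const reduced = textureBytes(256, 256, false); // 262,144 bytes (256 KiB)
console.log(full / reduced); // 16
```

A full mipmap chain adds roughly a third on top of the base level, so the same sixteen-fold saving carries over when mipmaps are enabled.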

Additional challenges came from the technologies we used to build the experience itself. Web AR platforms advance every day, but there are still severe limitations to their capabilities. For example, 8th Wall, the platform on which this experience runs, offers surface occlusion capabilities only for its Unity integration into native apps. For browser-based experiences that don’t yet have access to that plane detection technology, we have to emulate a floor by placing a vast invisible sheet at a defined distance below the camera. The distance to the “floor” is not dynamic and doesn’t change depending on whether the user is sitting or standing, resulting in an imperfect representation of reality. This process only makes us more excited to see the next steps web AR will take, as the technology continues to improve and provide us with new and even more compelling ways to augment our reality.
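The fixed-distance floor can be sketched in a few lines. The 1.5 m offset, the simple gravity step, and all names here are hypothetical; the point is that the floor height is derived only from the camera’s starting height, so nothing adapts to the user’s posture. If a seated user’s phone starts closer to the real floor than the offset assumes, objects would come to rest below the real floor.

```typescript
// Hypothetical offset: the invisible floor sheet sits this far (in metres)
// below the camera's starting height, whether the user sits or stands.
const FLOOR_OFFSET_M = 1.5;
const GRAVITY = 9.81; // m/s^2

interface Falling {
  y: number; // height in metres
  vy: number; // vertical velocity in m/s
}

function emulatedFloorY(cameraStartY: number): number {
  return cameraStartY - FLOOR_OFFSET_M;
}

// Advance one falling object by dt seconds (semi-implicit Euler), resting
// it on the floor plane once it reaches or passes it.
function step(obj: Falling, dt: number, floorY: number): Falling {
  const vy = obj.vy - GRAVITY * dt;
  const y = obj.y + vy * dt;
  return y <= floorY ? { y: floorY, vy: 0 } : { y, vy };
}

// An apple dropped 3 m above the camera eventually rests on the emulated floor.
const floorY = emulatedFloorY(0);
let apple: Falling = { y: 3, vy: 0 };
for (let i = 0; i < 200; i++) apple = step(apple, 1 / 60, floorY);
console.log(apple); // { y: -1.5, vy: 0 }
```

With real plane detection, `emulatedFloorY` would be replaced by the height of a detected surface, which is exactly the capability the browser platform lacked at the time.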

The political process is often a complicated and convoluted one, and accessing data on campaign finance can be overwhelming. Conceptualizing how much candidates spend on their campaigns shows the immensity of American politics. Using AR makes it easier to visualize the power held by the people funding these campaigns, and it raises real questions about the possibility of sweeping change if these funds were made available.

Jordan Long: Concept and Strategy

Nicole Riemer: Art Direction and Experience Design

Eric Liang: Experience Design and Development